
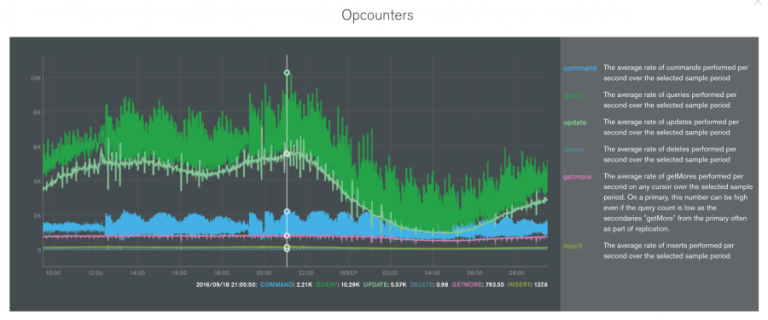

We had been using MongoDB 2.6 with MMAPv1 as the storage engine for two years. It was a stable component in our system until we upgraded to 3.0 and promoted secondaries configured with WiredTiger as the storage engine to primary. To put things in context, we do ~18.07K operations/second on one primary server. We have two shards in the cluster, so two primaries handle ~36.14K operations/second combined. This represents a small fraction of our incoming traffic, since we offload most of the storage to a custom-built in-memory storage engine.

WiredTiger promises higher throughput, powered by document-level concurrency as opposed to the collection-level concurrency of MMAPv1. In our quick tests before upgrading production, we saw a 7x performance improvement. Jaws dropped, and we decided to upgrade the following weekend. We planned to do this in phases:

1. Upgrade the cluster metadata and Mongo binaries from 2.6 to 3.0. Sleep for 3 days.

2. Re-sync a secondary with WiredTiger as the storage engine and promote it to primary. Sleep.

3. Switch the config servers to WiredTiger. Sleep.

4. Upgrade existing MONGODB-CR users to SCRAM-SHA-1. Sleep.
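Phase 2 boils down to restarting a secondary on an empty data directory with a different storage engine, which forces a full initial sync from the primary. The Python sketch below only builds the `mongod` command line for that restart. `--shardsvr`, `--replSet`, `--dbpath`, `--port`, `--storageEngine` and `--wiredTigerCacheSizeGB` are real `mongod` options; the function name, replica set name, path and cache size are hypothetical placeholders, so treat this as an illustration rather than the exact commands we ran.

```python
from typing import List, Optional


def resync_mongod_argv(
    replset: str,
    dbpath: str,
    port: int = 27017,
    cache_size_gb: Optional[float] = None,
) -> List[str]:
    """Build a command line for restarting a shard secondary on WiredTiger.

    Hypothetical helper. `dbpath` is assumed to be an EMPTY directory, so the
    node performs a full initial sync from the current primary on startup.
    """
    argv = [
        "mongod",
        "--shardsvr",                      # the replica set is a shard
        "--replSet", replset,              # rejoin the same replica set
        "--dbpath", dbpath,                # empty directory -> initial sync
        "--port", str(port),
        "--storageEngine", "wiredTiger",
    ]
    if cache_size_gb is not None:
        # Cap the WiredTiger cache explicitly instead of relying on the default.
        argv += ["--wiredTigerCacheSizeGB", str(cache_size_gb)]
    return argv


# Hypothetical values for illustration only.
print(" ".join(resync_mongod_argv("shard0", "/data/db-wt", cache_size_gb=60)))
```

Keeping the cache size as an explicit, optional argument makes the memory budget visible in the deployment config instead of leaving it to a version-dependent default.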

With so much sleep factored in, we were hoping to wake up sharp 🙂

Phase #1 – The binary upgrade ran like clockwork on Saturday morning, in under an hour. In the meantime, all incoming traffic was queued and processed after the upgrade. On Monday night, I began re-syncing the secondary server with WiredTiger as the storage engine.

So far, so good.

Phase #2 – On Tuesday, we stepped down our primary nodes to let the WiredTiger-powered secondary nodes begin serving production traffic. Within minutes, profiling data showed that our throughput had indeed increased.

At this point, we could likely have thrown 7x more traffic at MongoDB without a drop in performance. WiredTiger stayed true to its promise. After a couple of hours in production, we decided to re-sync the old MMAPv1 primaries, which were now secondaries, with WiredTiger as the storage engine. That left us running with a single functional data node (the primary) in the replica set.

MongoDB’s throughput plunged to about 1K operations/second within a few minutes; it felt like MongoDB had come to a halt. This was the beginning of the end of our short, wild ride with WiredTiger. As we scrambled to figure out what had happened, our monitoring system reported that the Mongos nodes were down. Starting them manually brought a few minutes of relief. The Mongos logs showed:

2016-MM-DDT14:29:55.497+0530 I CONTROL [signalProcessingThread] got signal 15 (Terminated), will terminate after current cmd ends

2016-MM-DDT14:29:55.497+0530 I SHARDING [signalProcessingThread] dbexit: rc:0

Who or what sent the SIGTERM is still unknown; the system logs had no details. A few minutes later, Mongos exited again.

By this time, we had all jumped into the war room. The Mongod nodes, along with Mongos, were restarted. Things looked stable, and we had some time to regroup and think about what had just happened. A few more lockups later, telemetry data showed that MongoDB locked up every time WiredTiger’s cache reached 100%. Our data nodes were running on r3.4xlarge (120GB RAM) instances, and by default MongoDB allocated 50% of RAM to WiredTiger’s cache. Over time, as the cache filled to 100%, MongoDB would come to a halt.

With the uneasy knowledge that a lockup was coming every few hours, and after a few more in the middle of the night, we moved to r3.8xlarge (240GB RAM; thank God for AWS). With 120G of cache, we learnt that WiredTiger stabilised at 98G of cache usage for our workload and working set. We still didn’t have a secondary node in our replica set, because initiating a re-sync would push the cache to 100% and bring MongoDB to a halt.

Another sleepless night later, we got the MongoDB data nodes running on an x1.32xlarge (2TB RAM). Isn’t AWS awesome? With 1TB of cache, we were able to get our secondary nodes to fully re-sync with the storage engine set to MMAPv1, so that we could revert and get away from WiredTiger and its cache requirements. MMAPv1 had lower throughput, but it was stable, and we had to get back to it ASAP.
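The cache sizes above follow directly from the default sizing rule. The sketch below assumes the MongoDB 3.0 default (the larger of 50% of RAM or 1 GB; later versions changed the formula, so check the documentation for your version) and compares it with the ~98G of cache WiredTiger settled at for our workload. The RAM figures are the rounded numbers quoted in this post, and the comparison covers steady state only: as described above, a re-sync still pushed a 120G cache to 100%.

```python
def default_wt_cache_gb(ram_gb: float) -> float:
    """Assumed MongoDB 3.0 default WiredTiger cache: larger of 50% of RAM or 1 GB."""
    return max(0.5 * ram_gb, 1.0)


# Instance RAM as quoted in this post, in GB (2TB rounded to 2000).
INSTANCES = {"r3.4xlarge": 120, "r3.8xlarge": 240, "x1.32xlarge": 2000}
OBSERVED_STEADY_STATE_GB = 98  # cache usage WiredTiger settled at on r3.8xlarge

for name, ram in INSTANCES.items():
    cache = default_wt_cache_gb(ram)
    fits = cache > OBSERVED_STEADY_STATE_GB  # steady state only, ignores re-sync spikes
    print(f"{name}: {cache:.0f} GB cache, holds 98 GB steady state: {fits}")
```

Passing `--wiredTigerCacheSizeGB` explicitly sidesteps this default entirely, which makes the memory budget a deliberate choice rather than a side effect of instance size.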

In hindsight, we were too quick to start re-syncing the old primaries with WiredTiger. Had they stayed on MMAPv1 and fully in sync, we could have promoted one of those secondaries back to primary and avoided all the sleepless nights.
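A simple guard would have caught this: never wipe a node for re-sync unless another healthy, in-sync secondary remains as a fallback. The sketch below works on a dictionary shaped like the output of the `replSetGetStatus` admin command, using only each member's `name`, `health`, `stateStr` and `optimeDate` fields. The helper names, host names, timestamps and the 30-second lag tolerance are hypothetical.

```python
from datetime import datetime, timedelta
from typing import Any, Dict, List


def in_sync_secondaries(status: Dict[str, Any], max_lag: timedelta) -> List[str]:
    """Names of healthy secondaries within `max_lag` of the primary's optime.

    Assumes exactly one member reports stateStr == "PRIMARY".
    """
    members = status["members"]
    primary = next(m for m in members if m["stateStr"] == "PRIMARY")
    return [
        m["name"]
        for m in members
        if m["stateStr"] == "SECONDARY"
        and m["health"] == 1
        and primary["optimeDate"] - m["optimeDate"] <= max_lag
    ]


def safe_to_resync(status: Dict[str, Any], node: str, max_lag: timedelta) -> bool:
    """Only wipe `node` if at least one OTHER in-sync secondary stays available."""
    return any(name != node for name in in_sync_secondaries(status, max_lag))


# Hypothetical replica set: db2 is caught up, db3 is three hours behind.
now = datetime(2016, 1, 1, 12, 0, 0)
status = {
    "members": [
        {"name": "db1:27017", "health": 1, "stateStr": "PRIMARY", "optimeDate": now},
        {"name": "db2:27017", "health": 1, "stateStr": "SECONDARY",
         "optimeDate": now - timedelta(seconds=2)},
        {"name": "db3:27017", "health": 1, "stateStr": "SECONDARY",
         "optimeDate": now - timedelta(hours=3)},
    ]
}
lag = timedelta(seconds=30)
print(safe_to_resync(status, "db3:27017", lag))  # db2 remains as a fallback
print(safe_to_resync(status, "db2:27017", lag))  # db2 is the only in-sync secondary
```

With pymongo, the live document would come from `client.admin.command("replSetGetStatus")`; the check itself is deliberately conservative and refuses to touch the last in-sync copy.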

Because of frequent updates and inserts, our oplog held only a few hours’ worth of operations during normal production hours. Only at night, when writes slowed down, did the oplog cover enough hours for a re-syncing node to finish its initial sync and then catch up from the oplog. This meant we could only re-sync at night.
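The constraint is simple arithmetic: the oplog covers roughly its size divided by the rate at which writes fill it, and an initial sync can only catch up if it finishes before the oplog rolls past the point where the sync started. The sketch below assumes a constant write rate for the duration of the sync; the oplog size, write rates and sync duration are hypothetical numbers, not measurements from our cluster.

```python
def oplog_window_hours(oplog_size_gb: float, oplog_gb_per_hour: float) -> float:
    """Hours of history a capped oplog holds at a steady write rate."""
    return oplog_size_gb / oplog_gb_per_hour


def resync_fits(initial_sync_hours: float, oplog_size_gb: float,
                oplog_gb_per_hour: float) -> bool:
    """True if the sync finishes before its starting point is overwritten."""
    return initial_sync_hours < oplog_window_hours(oplog_size_gb, oplog_gb_per_hour)


# Hypothetical: a 50 GB oplog and a 6-hour initial sync.
OPLOG_GB, SYNC_HOURS = 50, 6
print(oplog_window_hours(OPLOG_GB, 20), resync_fits(SYNC_HOURS, OPLOG_GB, 20))  # daytime rate
print(oplog_window_hours(OPLOG_GB, 4), resync_fits(SYNC_HOURS, OPLOG_GB, 4))    # night-time rate
```

The same numbers also show the other lever: a larger oplog widens the window without waiting for quieter hours.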

Based on our experience, WiredTiger lives up to its promise of a 7x to 10x throughput improvement, but it locks up when the cache hits 100%. We have been able to reproduce this with the latest version (3.2.9 at the time of writing) and are in the process of filing a bug report. We are committed to providing more information to help solve this issue. As it stands, WiredTiger, the default storage engine in the latest version of MongoDB, is unstable once the working set exceeds the configured cache size, and it will bring production to a halt.

As a closing thought: if you are evaluating or using MongoDB, think about disaster recovery. While firefighting, we realized just how difficult it is to restore from a filesystem snapshot backup or to set up a new cluster when your data lives in a sharded cluster. Exporting all data from each node and importing it into a new cluster is extremely slow for a dataset of ~200G per node; in our case, it would take ~36 hours.
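When planning disaster recovery, a back-of-the-envelope estimate of export-and-import time per shard is a useful first check. The sketch below assumes a steady combined export+import rate per shard and either fully parallel or fully serial processing of shards; the 10 GB/hour rate is a hypothetical figure, not one we measured, so plug in your own.

```python
def restore_hours(gb_per_shard: float, shards: int, gb_per_hour: float,
                  parallel: bool) -> float:
    """Rough wall-clock time to export and re-import every shard's data.

    Assumes a constant `gb_per_hour` per shard stream. With parallel=True all
    shards are processed concurrently; otherwise one after another.
    """
    per_shard = gb_per_shard / gb_per_hour
    return per_shard if parallel else per_shard * shards


# ~200G per node and two shards as in this post; the rate is hypothetical.
print(restore_hours(200, 2, 10, parallel=True))
print(restore_hours(200, 2, 10, parallel=False))
```

If the estimate is measured in days rather than hours, that is a strong hint to rehearse snapshot-based restores before you need them.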

UPDATE #1:

We made a boo-boo in the numbers we originally published. They are operations/second (literally the worst mistake you could make), not operations/minute as the opening paragraph first stated. I have updated this post to reflect the change.

UPDATE #2:

– All things being equal, WiredTiger should have been at least as stable as MMAPv1, considering that it is the default storage engine from MongoDB 3.2 onwards.

– Each time we restarted the data nodes, WiredTiger would work fine for a couple of hours and then freeze. Digging deeper, we noticed that it froze when the cache reached 95% capacity, so there’s clearly a cache eviction issue at play here.
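One way to get ahead of these freezes is to alert on cache fill before it reaches the level where they were observed. The sketch below reads the `maximum bytes configured` and `bytes currently in the cache` counters from the `wiredTiger.cache` section of `serverStatus` output (with pymongo, `client.admin.command("serverStatus")` returns that document). The helper names, the 90% threshold and the sample documents are hypothetical; the 98G-of-120G sample mirrors the steady state described earlier.

```python
from typing import Any, Dict

# Hypothetical threshold: fire before the ~95% level at which freezes were seen.
ALERT_FILL = 0.90
GIB = 2 ** 30


def wt_cache_fill(server_status: Dict[str, Any]) -> float:
    """Fraction of the configured WiredTiger cache currently in use."""
    cache = server_status["wiredTiger"]["cache"]
    return cache["bytes currently in the cache"] / cache["maximum bytes configured"]


def should_alert(server_status: Dict[str, Any], threshold: float = ALERT_FILL) -> bool:
    """True once cache fill reaches `threshold`."""
    return wt_cache_fill(server_status) >= threshold


def sample_status(used_gib: int, max_gib: int) -> Dict[str, Any]:
    """Minimal stand-in for a serverStatus document (sample data only)."""
    return {"wiredTiger": {"cache": {
        "maximum bytes configured": max_gib * GIB,
        "bytes currently in the cache": used_gib * GIB,
    }}}


healthy = sample_status(98, 120)    # steady state on r3.8xlarge
filling = sample_status(115, 120)   # hypothetical, e.g. during a re-sync
print(f"{wt_cache_fill(healthy):.1%}", should_alert(healthy))
print(f"{wt_cache_fill(filling):.1%}", should_alert(filling))
```

Polling this every minute or so and paging well below the freeze point would at least turn a midnight lockup into a planned intervention.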