We have been experimenting with faster, more reliable ways to work with Auto Scaling, so that there are fewer last-minute surprises while we scale up to handle daily peaks of ~116K requests/second. Here is what we learnt along the way:

1. Respond as fast as possible

Read the incoming request, look up an in-memory system (a cache server or service, or an in-memory database) and respond. If you have to spin a disk or find data on an EBS volume, you are limited by its throughput. Scaling the user-facing frontend in response to increased traffic also increases load upstream in the stack, which complicates things further.

For write-heavy incoming workloads, park the data in an in-memory store and queue it for processing. Elastic Load Balancing graphs latency (the time between the load balancer sending the request to an instance and receiving the headers of the response). Use it to measure request processing time. Together with application response time, this should give you a fair idea of how much you have to provision to handle a given amount of incoming traffic.
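As a rough illustration of turning latency into a provisioning estimate, here is a minimal sketch based on Little's law (requests in flight = arrival rate × latency). The 10 ms latency, the per-instance concurrency of 200 and the 30% headroom are assumed figures for the example, not measurements from our setup.

```python
import math


def instances_needed(requests_per_second, avg_latency_seconds,
                     concurrency_per_instance, headroom=0.3):
    """Estimate how many instances a given traffic level needs.

    Little's law: requests in flight = arrival rate * average latency.
    concurrency_per_instance and headroom are assumptions you would
    calibrate with a load test of your own application.
    """
    in_flight = requests_per_second * avg_latency_seconds
    # Reserve spare capacity so a sudden spike does not saturate the fleet.
    usable_per_instance = concurrency_per_instance * (1 - headroom)
    return math.ceil(in_flight / usable_per_instance)


# 116K requests/second at an assumed 10 ms latency, 200 concurrent
# requests per instance and 30% headroom.
print(instances_needed(116_000, 0.010, 200))
```

With these assumed figures the estimate comes to 9 instances. Feed in the latency you actually see on the ELB graph to get a number that means something for your workload.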

2. Ensure consistent bootstrap at instance launch

As instances are launched by a scale-up policy, we need to guarantee that these new instances can serve traffic as soon as they’re up. Instances that are bootstrapped incompletely are no good. In our case, we use CodeDeploy to minimize the number of moving parts required to bootstrap an instance. CodeDeploy hooks into the instance launch lifecycle and triggers a deployment of the last successfully deployed version of your application or bootstrap code. Fewer moving parts means more confidence in newly spawned instances.

You can get CodeDeploy to do arbitrary things at different deployment lifecycle stages. Stay away from making external network calls (to package repositories, etc.) in a deployment. A change in an upstream dependency can cause a deployment that worked before to fail. This is why we package our application in a Docker container. It ensures that the application’s execution environment is consistent and that there are no external dependencies to resolve when setting up its environment and running it during a scale-up event.

Alternatively, we could bake the application into an AMI, but we chose not to because we deploy six to eight times every day. That’s too many duplicate bits to pay for, and it is one of the problems Docker is supposed to solve. Besides, we have fewer packages to deal with in a container than in an AMI. This reduces our attack surface, as there are fewer exploitable services and packages.

3. Monitor instances using ELB health checks

Auto Scaling monitors the health of instances in the group and can replace instances that are unhealthy. It can be configured to use either EC2 or ELB health monitoring to qualify the state of an instance. Using ELB monitoring enables Auto Scaling to replace instances that do not respond to application health checks. If your app stops responding, the instance is replaced. No more manual restarts in the middle of the night or in the middle of a massive traffic spike.
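For the ELB health check to be meaningful, the application needs an endpoint that returns HTTP 200 only when it can actually serve traffic. Below is a minimal sketch using only the Python standard library. The `/health` path, port 8080 and the `cache_reachable` check are hypothetical names chosen for illustration; they are not our production setup.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer


def health_status(checks):
    """Run each check and return (http_status, body).

    checks maps a name to a zero-argument callable returning True when
    that dependency is healthy. A check that raises counts as failed.
    """
    failed = []
    for name, check in checks.items():
        try:
            ok = check()
        except Exception:
            ok = False
        if not ok:
            failed.append(name)
    if failed:
        return 503, "unhealthy: " + ",".join(failed)
    return 200, "ok"


# Hypothetical check; replace with a real probe of your in-memory store.
CHECKS = {"cache_reachable": lambda: True}


class HealthHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        if self.path != "/health":
            self.send_error(404)
            return
        status, body = health_status(CHECKS)
        payload = body.encode()
        self.send_response(status)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def serve(port=8080):
    """Start the health endpoint; point the ELB health check at /health."""
    HTTPServer(("0.0.0.0", port), HealthHandler).serve_forever()
```

Any non-200 response (or a timeout) counts as a failed health check, so once the unhealthy threshold is crossed the instance is marked unhealthy and Auto Scaling replaces it.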

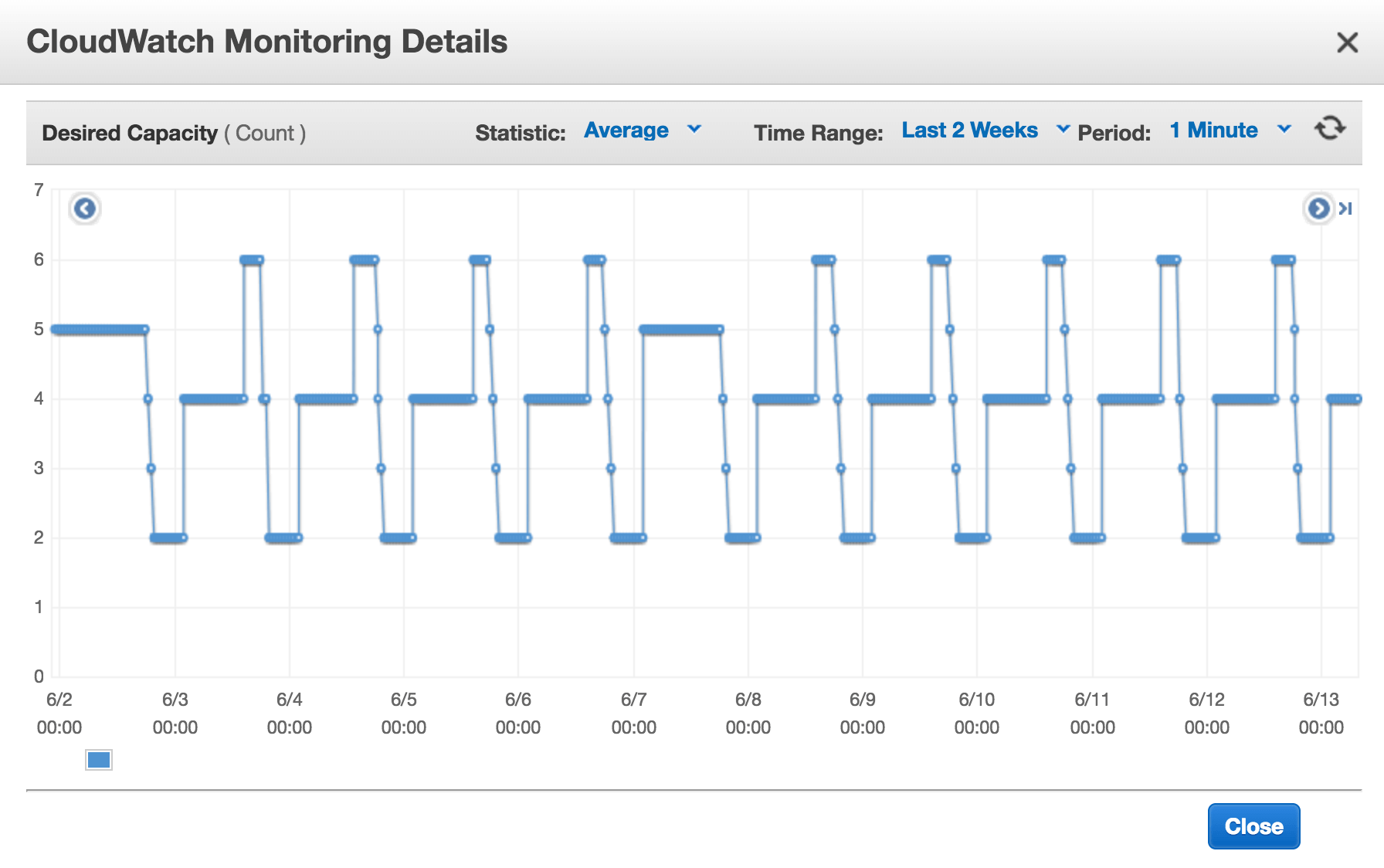

4. Auto Scaling on complex metrics

In addition to the metrics provided by EC2, ELB or the Auto Scaling group, you can publish custom metrics to CloudWatch and use them to trigger scale-up or scale-down policies.
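Publishing a custom metric could look roughly like the sketch below. It builds the payload for CloudWatch's `PutMetricData` call and sends it through a client that is passed in, which in practice would be `boto3.client("cloudwatch")`. The namespace `MyApp` and the metric name `QueueDepth` are hypothetical; pick whatever reflects the load on your own application.

```python
def build_metric_data(metric_name, value, group_name, unit="Count"):
    """Build the MetricData list expected by CloudWatch PutMetricData."""
    return [{
        "MetricName": metric_name,
        # Tag the data point with the group so an alarm can aggregate on it.
        "Dimensions": [{"Name": "AutoScalingGroupName", "Value": group_name}],
        "Value": float(value),
        "Unit": unit,
    }]


def publish_metric(cloudwatch, namespace, metric_name, value, group_name):
    """Send one data point using a CloudWatch client.

    cloudwatch is assumed to be a boto3 CloudWatch client (or any object
    exposing a compatible put_metric_data method).
    """
    cloudwatch.put_metric_data(
        Namespace=namespace,
        MetricData=build_metric_data(metric_name, value, group_name),
    )


# Example call (hypothetical names), assuming boto3 is installed and
# AWS credentials are configured:
#   import boto3
#   publish_metric(boto3.client("cloudwatch"), "MyApp", "QueueDepth", 42, "web-asg")
```

A CloudWatch alarm on this metric can then be attached to a scaling policy, just like an alarm on a built-in metric.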

5. Gracefully handling EC2 instance termination

Our monitoring systems used to treat instances terminated by an Auto Scaling group as down, which triggered an alert to the engineering team. This quickly caused notification fatigue. We put in place a handler for instances managed by an Auto Scaling group that deletes the host from monitoring instead of sending a notification. This works very well for us: instances are spawned, they are automatically monitored and, when it’s time, they are automatically deleted.
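The idea behind such a handler can be sketched as follows. This is not our actual implementation: the `monitor` object and its `delete_host` and `notify` methods are a hypothetical interface standing in for whatever your monitoring system offers. The one AWS-specific detail is that instances launched by an Auto Scaling group carry the `aws:autoscaling:groupName` tag.

```python
ASG_TAG = "aws:autoscaling:groupName"


def on_host_down(host, monitor):
    """Decide what to do when monitoring reports a host as down.

    host is a dict with an "instance_id" and a "tags" dict.
    monitor is a hypothetical client for the monitoring system.
    """
    if host.get("tags", {}).get(ASG_TAG):
        # Managed by an Auto Scaling group: termination is expected,
        # so drop the host from monitoring quietly.
        monitor.delete_host(host["instance_id"])
        return "deleted"
    # Any other host going down is a real incident.
    monitor.notify("Host down: " + host["instance_id"])
    return "notified"
```

A stricter version would first confirm that the instance has really been terminated, so that a group-managed instance that is merely unresponsive still raises an alert.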

6. Save some money while you are at it

While Auto Scaling itself saves a ton of money, you can squeeze out more by having it terminate the instances that are closest to their next instance hour (the ClosestToNextInstanceHour termination policy). This termination policy gives you maximum bang for your buck.

Have you come across other Auto Scaling tricks that reduce manual intervention, improve reliability and help keep production responding? Do let us know.
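To make the selection rule concrete, here is a small sketch of the logic: for each instance, work out how long remains until its next billed hour begins, and pick the one with the least time left. Auto Scaling does this for you once the termination policy is set; the instance IDs and timestamps below are made up for illustration.

```python
from datetime import datetime, timezone


def seconds_to_next_instance_hour(launch_time, now):
    """Seconds until the instance rolls over into its next billed hour."""
    elapsed = (now - launch_time).total_seconds()
    return 3600 - (elapsed % 3600)


def pick_for_termination(launch_times, now):
    """Return the instance ID closest to its next instance hour.

    launch_times maps instance ID -> timezone-aware launch datetime.
    """
    return min(
        launch_times,
        key=lambda iid: seconds_to_next_instance_hour(launch_times[iid], now),
    )


now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
fleet = {
    "i-aaa": datetime(2024, 1, 1, 10, 5, tzinfo=timezone.utc),   # 5 min left
    "i-bbb": datetime(2024, 1, 1, 11, 30, tzinfo=timezone.utc),  # 30 min left
}
print(pick_for_termination(fleet, now))
```

Here `i-aaa` is chosen: it is 55 minutes into its current hour, so terminating it wastes only 5 paid minutes, versus 30 for `i-bbb`.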

Francis Pereira

Francis Pereira, a founding member at CleverTap, leads the security and infrastructure engineering teams, focusing on infrastructure automation, security, scalability, and finops.
