Mobile publishers fantasize about rocketing to the top charts of app stores as if by magic. But any App Store Optimization specialist will tell you that it doesn’t just happen: it’s the result of a high-quality product, robust marketing strategies, and careful App Store Optimization.

Every app publisher needs to understand the importance of smart App Store Optimization for driving downloads and conversions.

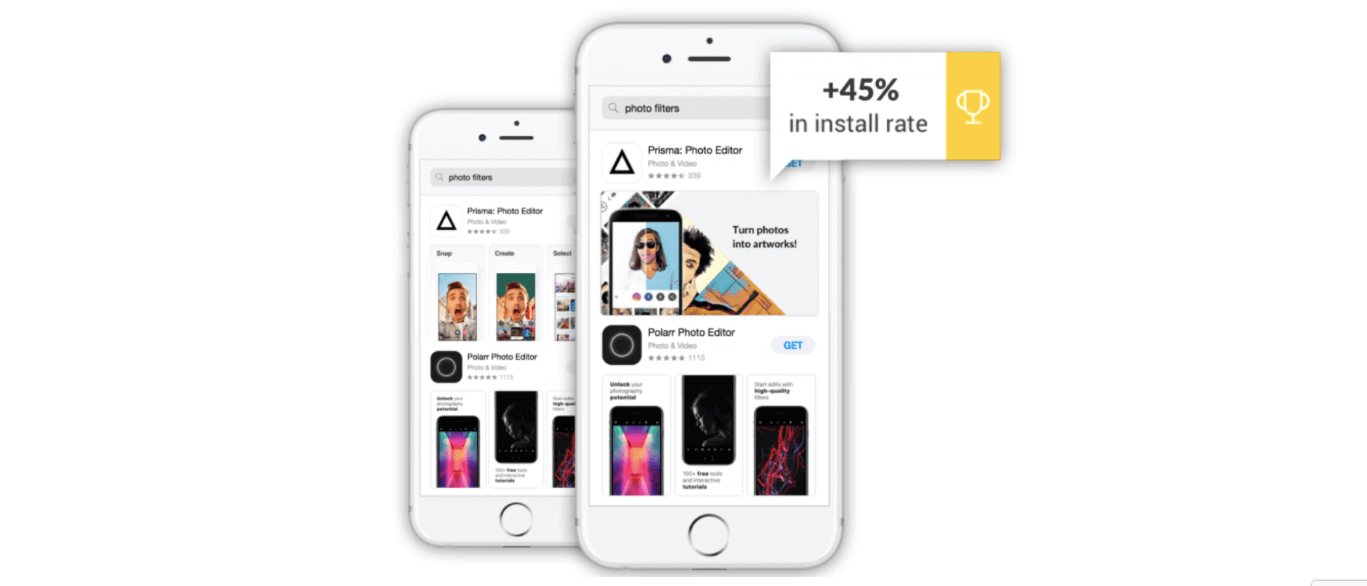

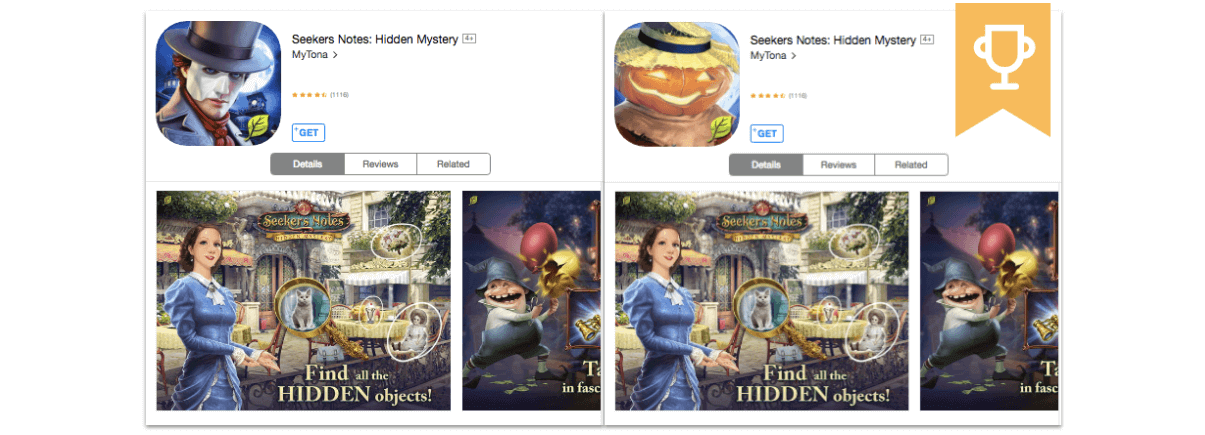

ASO isn’t just about keyword optimization. Sure, it’s dangerous to underestimate the impact of expertly tailored keywords. But it’s equally risky to neglect important product page elements like screenshots, icons, and video previews.

Image: SplitMetrics

Data-driven decisions are nearly impossible without intelligent A/B testing, and that’s especially true when it comes to App Store Optimization. If you want your app to make it to the top, you can’t rely on guesswork or gut feelings.

At the same time, mindless experiments will lead you nowhere. That’s why it’s so important to define specific goals, adhere to a scientific process, and incorporate the following best practices.

Product Page A/B Testing Basics

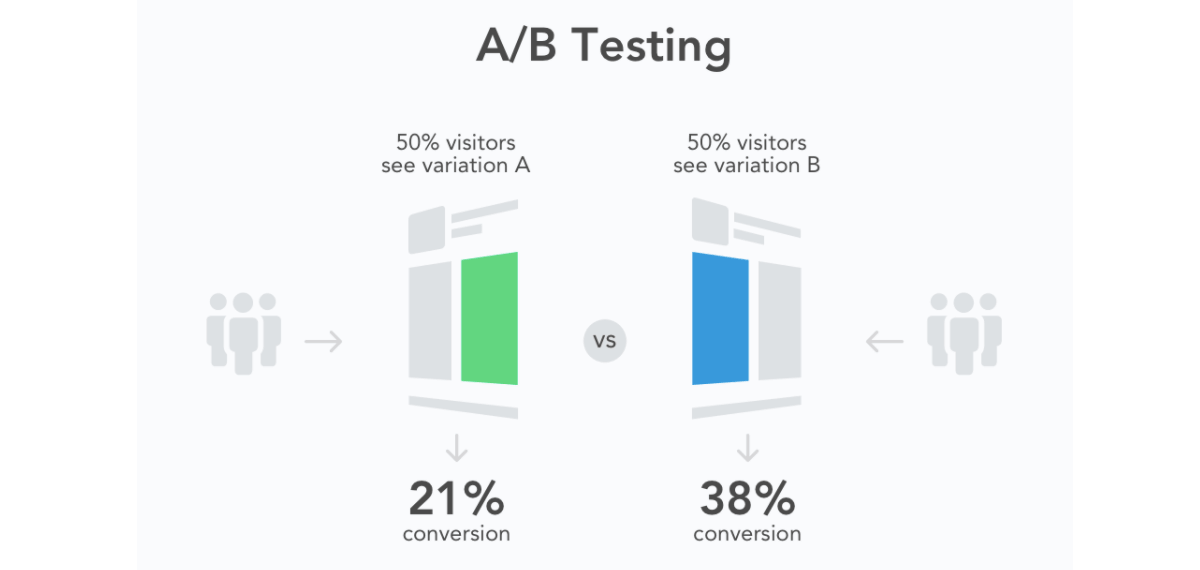

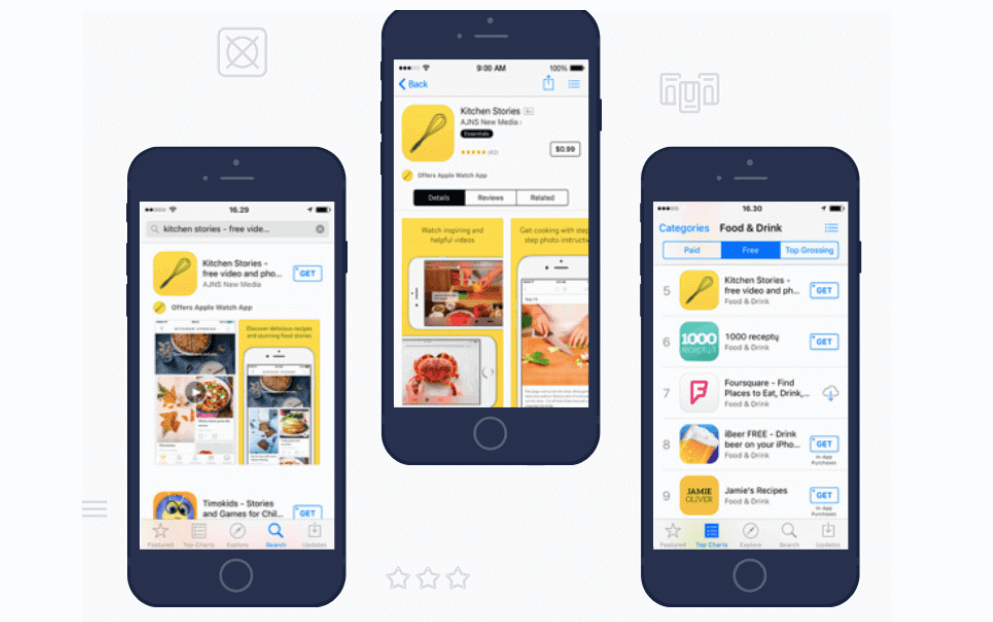

Put simply, A/B testing compares two or more variants on your product page to find out which performs better. This could be your description copy, screenshots, icon, or any other page element. Keep in mind that you’ll need to distribute traffic equally between variants in order to get accurate results on performance and conversions.

Image: SplitMetrics
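One common way to split traffic equally is to hash a stable visitor ID into a bucket, so each visitor always sees the same variant and every variant receives roughly the same share of traffic. The sketch below assumes you have such an ID; the function name `assign_variant` and the variant labels are hypothetical, and dedicated testing platforms such as SplitMetrics handle the split for you.

```python
import hashlib
from collections import Counter
from typing import Sequence


def assign_variant(visitor_id: str, variants: Sequence[str]) -> str:
    """Map a visitor to one variant, deterministically and with near-equal odds."""
    if not variants:
        raise ValueError("at least one variant is required")
    # SHA-256 spreads IDs uniformly, so taking the hash modulo the number of
    # variants gives (approximately) equal traffic to each variant.
    digest = hashlib.sha256(visitor_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % len(variants)
    return variants[bucket]


if __name__ == "__main__":
    variants = ["control", "new_icon", "new_screenshots"]
    counts = Counter(assign_variant(f"visitor-{i}", variants) for i in range(30_000))
    print(counts)  # each variant gets roughly 10,000 visitors
```

Because the assignment is deterministic, a returning visitor never flips between variants, which would otherwise blur the comparison.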

A/B tests not only help mobile publishers improve conversion rates, they also help evaluate different audience segments, traffic channels, and product positioning. Split testing during your app’s pre-launch stage is also a great way to refine your product and marketing strategy.

Pre-A/B Testing Phase

Effective A/B testing is impossible without thorough preparation. Formulating a new experiment must include two extremely important tasks:

- Research and analysis

- Establishing a hypothesis

Research and Analysis

Learn everything you can about your market, competitors, and target audience:

- Review app store product pages of your main competitors

- Identify the best practices in your app category

- Study techniques that are popular among industry leaders

- Consider which trends can be incorporated into your product page layout

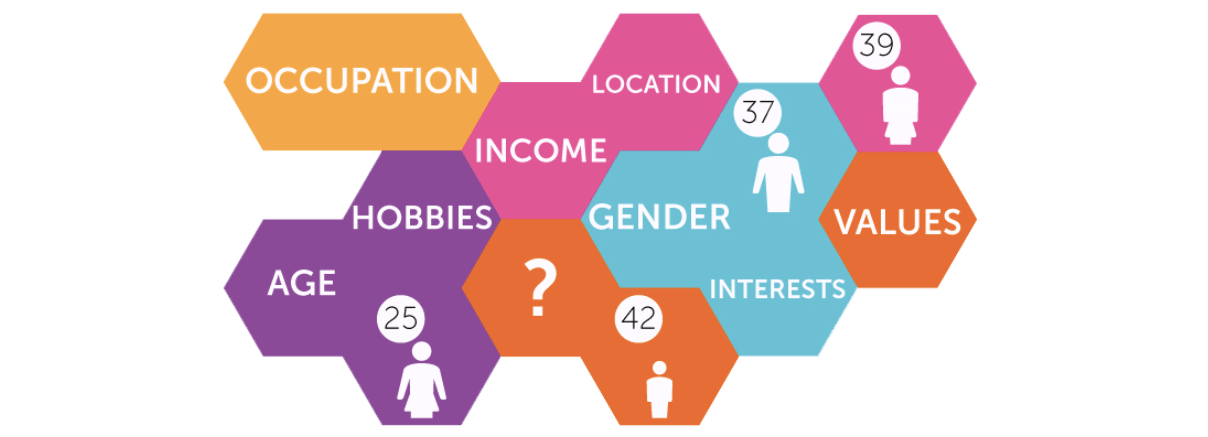

One more factor that can’t be neglected is identifying your target audience. Every detail matters – from basic demographics like age and gender to interests and preferences.

Image: score87

The more you know about your users, the easier it will be to tailor your app store creatives and campaigns to appeal to them. Consistent A/B testing can help you home in on your ideal user: create different ad groups and see which user segment converts best.

Once you’ve zeroed in on your ideal user, you can evaluate traffic sources. Use A/B testing to run identical campaigns across different ad networks to find out which traffic channel performs best.
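Comparing channels comes down to computing the product page conversion rate for each identical campaign and ranking them. A minimal sketch, assuming you can export visitors and install taps per channel; the `ChannelResult` type, the channel names, and the numbers are hypothetical and for illustration only.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ChannelResult:
    channel: str
    visitors: int   # visitors who reached the test product page
    installs: int   # visitors who tapped the install button

    @property
    def conversion_rate(self) -> float:
        # Guard against empty channels instead of dividing by zero.
        return self.installs / self.visitors if self.visitors else 0.0


def rank_channels(results: List[ChannelResult]) -> List[ChannelResult]:
    """Order channels from best to worst product page conversion rate."""
    return sorted(results, key=lambda r: r.conversion_rate, reverse=True)


if __name__ == "__main__":
    # Illustrative numbers only, not real campaign data.
    results = [
        ChannelResult("network_a", visitors=1200, installs=96),
        ChannelResult("network_b", visitors=1100, installs=121),
        ChannelResult("network_c", visitors=900, installs=45),
    ]
    for r in rank_channels(results):
        print(f"{r.channel}: {r.conversion_rate:.1%}")
```

A raw ranking on small samples can be driven by noise, so check that the gap between channels is statistically significant before committing budget.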

According to data from more than 15,000 experiments launched on the SplitMetrics A/B testing platform, Facebook and AdWords are the best traffic channels.

When evaluating channels, be careful to watch for:

- Fraud or low-quality traffic, which results in a large share of filtered visitors

- Cross-promotional campaigns, which need huge amounts of traffic to produce meaningful results

- Delayed results when using smart banners for A/B experiments: the average test duration is 1-2 months
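A simple way to watch for fraud or low-quality traffic is to track what fraction of each channel's visits the testing platform filters out. The sketch below assumes you can export total and filtered visit counts per channel; the function names and the 30% cut-off are hypothetical choices, not a published benchmark, so tune the threshold to your own data.

```python
from typing import Dict, List, Tuple


def filtered_share(total_visits: int, filtered_visits: int) -> float:
    """Fraction of a channel's visits that were filtered out."""
    if total_visits <= 0:
        raise ValueError("total_visits must be positive")
    return filtered_visits / total_visits


def flag_suspicious_channels(
    stats: Dict[str, Tuple[int, int]], max_share: float = 0.3
) -> List[str]:
    """Return channels whose filtered share exceeds max_share (an assumed cut-off).

    stats maps channel name -> (total_visits, filtered_visits).
    """
    return sorted(
        name
        for name, (total, filtered) in stats.items()
        if filtered_share(total, filtered) > max_share
    )


if __name__ == "__main__":
    # Illustrative counts only.
    stats = {"network_a": (1000, 80), "network_b": (1000, 450), "network_c": (500, 200)}
    print(flag_suspicious_channels(stats))
```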

Formulating a Sound Hypothesis

Without careful research and a solid hypothesis, your A/B test won’t give you actionable insights. An effective hypothesis boils down to three key ingredients:

- What should be changed

- The expected results of the change(s)

- Why you anticipate those results

Image: GameAnalytics

Here’s an example of a good hypothesis from an actual A/B test:

Putting a character with an open mouth on my gaming app icon will convert better, since this ‘action mouth’ strategy is successfully used by industry leaders.

MyTona used this hypothesis in an A/B test for their game Seekers Notes: Hidden Mystery, and it proved correct: the pumpkin head with the action mouth performed nearly 10% better than the control.

Image: SplitMetrics
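A figure like "nearly 10% better" can mean a relative lift or an absolute difference in percentage points, and the two readings differ a lot. The article does not publish MyTona's underlying conversion rates, so the rates in this sketch are hypothetical; it only shows how each number is computed.

```python
def relative_lift(control_rate: float, variant_rate: float) -> float:
    """Relative improvement over the control: 0.10 means '10% better'."""
    if control_rate <= 0:
        raise ValueError("control_rate must be positive")
    return (variant_rate - control_rate) / control_rate


def absolute_lift_points(control_rate: float, variant_rate: float) -> float:
    """Difference in conversion rate, expressed in percentage points."""
    return (variant_rate - control_rate) * 100


if __name__ == "__main__":
    # Hypothetical rates: not MyTona's actual numbers.
    control, variant = 0.30, 0.33
    print(f"relative lift: {relative_lift(control, variant):.1%}")
    print(f"absolute lift: {absolute_lift_points(control, variant):.1f} pp")
```

Here a 3-point absolute gain on a 30% baseline is a 10% relative lift, so always state which one you are reporting.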

Note that testing nearly identical variations wastes time and traffic. Slight changes to the same character’s head posture don’t make a good hypothesis. It’s better to experiment with different characters and facial expressions, background colors, app store screenshots, orientation, and so on.

Your hypothesis will help you choose the best type of split-test to use:

- Product page tests: Extremely useful for publishers whose strategy rests on high volumes of paid traffic

- Search results page tests: Vital for apps that rely on organic traffic and marketers that want to improve discoverability

Image: SplitMetrics

A/B Testing Cornerstones: Traffic and Targeting

Once the layouts of your variations are ready, you can get down to A/B testing itself. Many marketers rely on A/B testing tools like SplitMetrics to minimize the margin of error and focus on two split testing cornerstones: traffic and targeting.

You may be wondering, “How much traffic do I need for a meaningful test?” Unfortunately, there’s no standard answer to this question. It all depends on your specific app, and various factors such as:

- Traffic source. Every traffic source has a different converting power. An unknown ad channel might be swarming with bots, so you would need considerably more users than with a source like Facebook.

- The average conversion rate of your product page in the major stores. The higher your app’s conversion rate, the less traffic you need.

- Targeting. Quality ad channels offer advanced targeting options, which ultimately lead to higher conversion rates for the store page under test.

Normally, publishers need at least 400-500 users per variation to get statistically significant results, and 800-1,000 visitors for search or category tests. Just remember: these figures are relative, and each experiment is unique. You can always use a sample size calculator, such as the one Optimizely offers.
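Sample size calculators are built on a power calculation. The sketch below uses the textbook formula for a two-sided, two-proportion z-test, not necessarily the method of any specific calculator. It assumes the conventional 5% significance level and 80% power (our defaults, not figures from this article); `baseline_rate` is your current page conversion rate and `min_detectable_lift` is the smallest relative improvement you want to detect. It also shows why a higher baseline conversion rate needs less traffic.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(
    baseline_rate: float,
    min_detectable_lift: float,
    alpha: float = 0.05,
    power: float = 0.8,
) -> int:
    """Visitors needed per variant for a two-sided two-proportion z-test.

    min_detectable_lift is relative: 0.2 means detecting a 10% -> 12% change.
    """
    p1 = baseline_rate
    p2 = baseline_rate * (1 + min_detectable_lift)
    if not (0 < p1 < 1 and 0 < p2 < 1) or p1 == p2:
        raise ValueError("rates must differ and lie strictly between 0 and 1")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    p_bar = (p1 + p2) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))
    ) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


if __name__ == "__main__":
    for baseline in (0.10, 0.30):
        n = sample_size_per_variant(baseline, 0.2)
        print(f"baseline {baseline:.0%}: {n} visitors per variant")
```

The result depends heavily on the lift you want to detect: smaller expected differences require far more visitors than the rule-of-thumb figures above.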

Targeting is another highly impactful aspect of A/B testing that demands close attention, since it’s a key factor in getting statistically significant results. Assuming you’ve done your homework in the research phase, fine-tuning the targeting for your tests should be fairly straightforward.

Image: SplitMetrics

Specialized A/B testing platforms can filter irrelevant traffic, such as:

- Users who don’t match the test’s requirements (for example, Google Play users visiting App Store tests)

- Mistaps and misclicks

- Bots and fraudulent traffic

- Repetitive visits
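To make the four filters concrete, here is a minimal sketch of how they might be applied to raw visit records. Everything in it is hypothetical: the `Visit` fields, the assumption that a bot flag comes from some upstream fraud detector, and the 1-second dwell time used to treat a visit as a mistap. Real platforms rely on their own, richer signals.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class Visit:
    visitor_id: str
    platform: str           # "ios" or "android"
    is_bot: bool            # assumed to come from an upstream fraud detector
    seconds_on_page: float


def filter_visits(
    visits: Iterable[Visit], target_platform: str = "ios", min_seconds: float = 1.0
) -> List[Visit]:
    """Drop visits a test should not count, keeping each visitor's first valid visit."""
    seen = set()
    kept = []
    for v in visits:
        if v.platform != target_platform:
            continue  # wrong store, e.g. an Android user on an App Store test
        if v.is_bot:
            continue  # bots and fraudulent traffic
        if v.seconds_on_page < min_seconds:
            continue  # very short visits treated as mistaps or misclicks
        if v.visitor_id in seen:
            continue  # repeat visit from someone already counted
        seen.add(v.visitor_id)
        kept.append(v)
    return kept


if __name__ == "__main__":
    visits = [
        Visit("u1", "ios", False, 12.0),
        Visit("u2", "android", False, 8.0),
        Visit("u3", "ios", True, 5.0),
        Visit("u4", "ios", False, 0.3),
        Visit("u1", "ios", False, 20.0),
        Visit("u5", "ios", False, 3.0),
    ]
    print([v.visitor_id for v in filter_visits(visits)])
```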

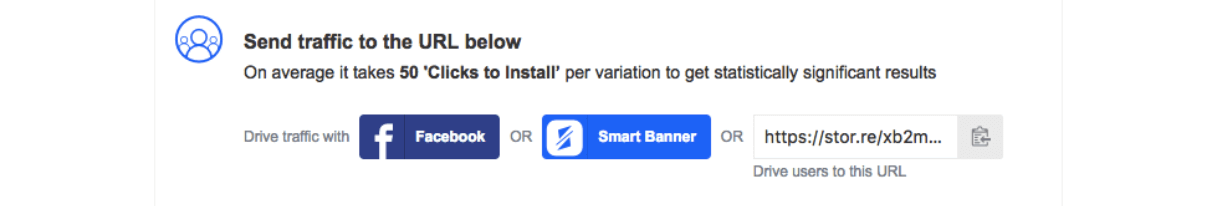

Completing A/B Experiments

We recommend running each experiment for 7-10 days before ending it. That way, you can track user behavior on every day of the week, and you’re more likely to get statistically significant results. You’ll also normally need at least 50 ‘Clicks to Install’ per variation.

Image: SplitMetrics
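The two rules of thumb above (at least 7 days and at least 50 ‘Clicks to Install’ per variation) can be encoded as a simple stopping check. This is a sketch with a hypothetical function name; meeting these minimums does not by itself make a result significant, so still run a significance test before declaring a winner.

```python
from typing import Mapping


def ready_to_stop(
    days_running: int,
    clicks_to_install: Mapping[str, int],
    min_days: int = 7,
    min_clicks: int = 50,
) -> bool:
    """Check the rule-of-thumb stopping conditions for an experiment.

    clicks_to_install maps each variant name to its 'Clicks to Install' count.
    """
    if days_running < min_days:
        # Too short to cover a full week of user behavior.
        return False
    # Every variant, not just the current leader, must reach the minimum.
    return bool(clicks_to_install) and all(
        clicks >= min_clicks for clicks in clicks_to_install.values()
    )


if __name__ == "__main__":
    print(ready_to_stop(8, {"control": 62, "variant_b": 55}))
    print(ready_to_stop(9, {"control": 62, "variant_b": 41}))
```

Checking every variant guards against stopping early just because one variant looks good, a habit that inflates false positives.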

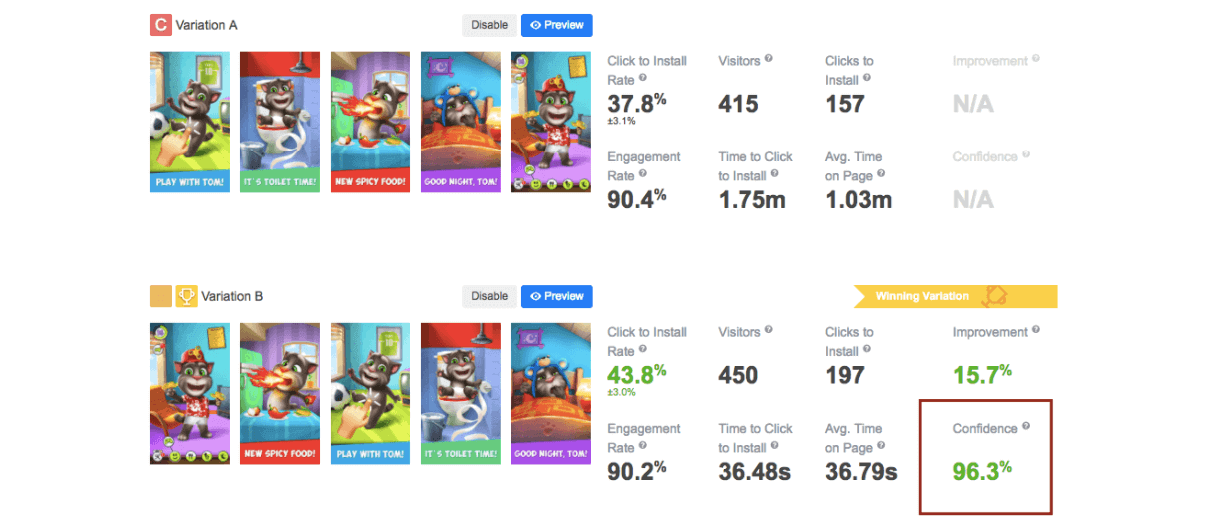

Many publishers tell us that analyzing A/B test results is their favorite part of the process. It can be pretty exciting to find out whether your hypothesis was right and to work out why the results turned out as they did. But prepare yourself for the very real possibility that most of your assumptions will be proven wrong.

There are no negative results when it comes to A/B testing. Even seemingly disastrous test results carry valuable takeaways that help mobile marketers boost an app’s performance in the stores, or at the very least prevent them from making misguided decisions about campaign strategies.

If your test identifies a clear, statistically significant winner, you can update your app store page right away. But if your new layout wins by 1% or less, the chance of seeing a real conversion boost after rollout is close to zero. In practice, only an advantage of at least 3-4% will improve your app’s store performance.

Image: SplitMetrics
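The decision above combines two checks: is the difference statistically significant, and is it large enough to matter? The sketch below uses a standard pooled two-proportion z-test for the first check. For the second, it assumes the 3-4% threshold refers to relative lift and uses 3% as the default; the article does not say whether it means relative or absolute, so adjust `min_lift` to your own reading. The function names are hypothetical.

```python
import math
from statistics import NormalDist
from typing import Tuple


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> Tuple[float, float]:
    """Two-sided pooled z-test for a difference in conversion rates.

    a = control, b = variant. Returns (z, p_value).
    """
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("pooled conversion rate of exactly 0% or 100% cannot be tested")
    z = (conv_b / n_b - conv_a / n_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


def adopt_variant(
    conv_a: int, n_a: int, conv_b: int, n_b: int, alpha: float = 0.05, min_lift: float = 0.03
) -> bool:
    """Adopt only a significant winner whose relative lift is at least min_lift."""
    rate_a, rate_b = conv_a / n_a, conv_b / n_b
    if rate_a == 0:
        raise ValueError("control conversion rate must be positive")
    lift = (rate_b - rate_a) / rate_a
    _, p_value = two_proportion_z_test(conv_a, n_a, conv_b, n_b)
    return p_value < alpha and lift >= min_lift


if __name__ == "__main__":
    # Illustrative counts: 10% vs 12.5% conversion on 4,000 visitors each.
    print(adopt_variant(400, 4000, 500, 4000))
```

With very large samples, even a tiny difference becomes significant, which is exactly why the minimum-lift check is kept separate from the p-value.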

App Store A/B Testing Best Practices

To make the most of your A/B testing efforts, observe the following best practices:

- Test only one hypothesis per experiment.

- Drive enough traffic and target it carefully: statistically significant results depend on both.

- Make your variations look as close to the actual store pages as possible.

- Remember that every app store is a dynamic ecosystem. One successful experiment is not enough, so be prepared to conduct follow-up tests to scale your results.

It makes sense to plan each step of your A/B testing strategy in advance. Use the classic App Store A/B testing timeline:

- Research and analysis

- Brainstorm variations

- Design variations

- Launch A/B test

- Evaluate results

- Implement alterations and track results

- Conduct a follow-up test

A/B testing might seem easy, but it takes persistence and patience. If you’re ready to commit, though, the effort will pay off in a big way. Split experiments can not only skyrocket your conversions for paid and organic traffic, but also reinforce your marketing strategy with useful insights and vital metrics.

Shivkumar M

Head Product Launches, Adoption, & Evangelism. Expert in cross-channel marketing strategies & platforms.
