Today, we have more computing power in a single smartphone than the entire NASA space program used to put a man on the moon.

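Moore’s Law, first observed in 1965 and later revised, holds that the number of transistors on a chip doubles roughly every two years, which is why computers have steadily become smaller and more powerful. The smartphone is one of the most visible results of that trend.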

As our devices decrease in size and increase in speed, the amount of computation needed to train artificial intelligence (measured in operations per second) is increasing at a much faster rate.

This increase in computational requirements has also increased the consumption of energy by orders of magnitude.1 What is it about artificial intelligence that is causing this increase in the need to store and compute data?

In this article, we introduce artificial intelligence, how mobile AI is progressing, and how marketers can use this cutting-edge technology through examples of real-world applications.

What is Artificial Intelligence?

Artificial intelligence is the ability of machines to analyze data, including unstructured data, draw conclusions from it, and perform tasks autonomously.

Machine learning, or ML, is the concept driving modern AI forward. Models are trained on ever-larger amounts of data so that they learn and improve at their intended task. In ML the motto is, “the more data the better.”

For example, to train its AlphaGo model to beat the world’s best human Go players, the DeepMind team enabled the AI to train itself. That’s right, AlphaGo is largely self-taught. The AI played millions of games against itself to learn and continually improve.2

Why is AI Moving To Mobile?

You may be asking yourself, “How can this much data possibly be stored and processed on my phone when I don’t even have enough space for all my video files or to download an app?”

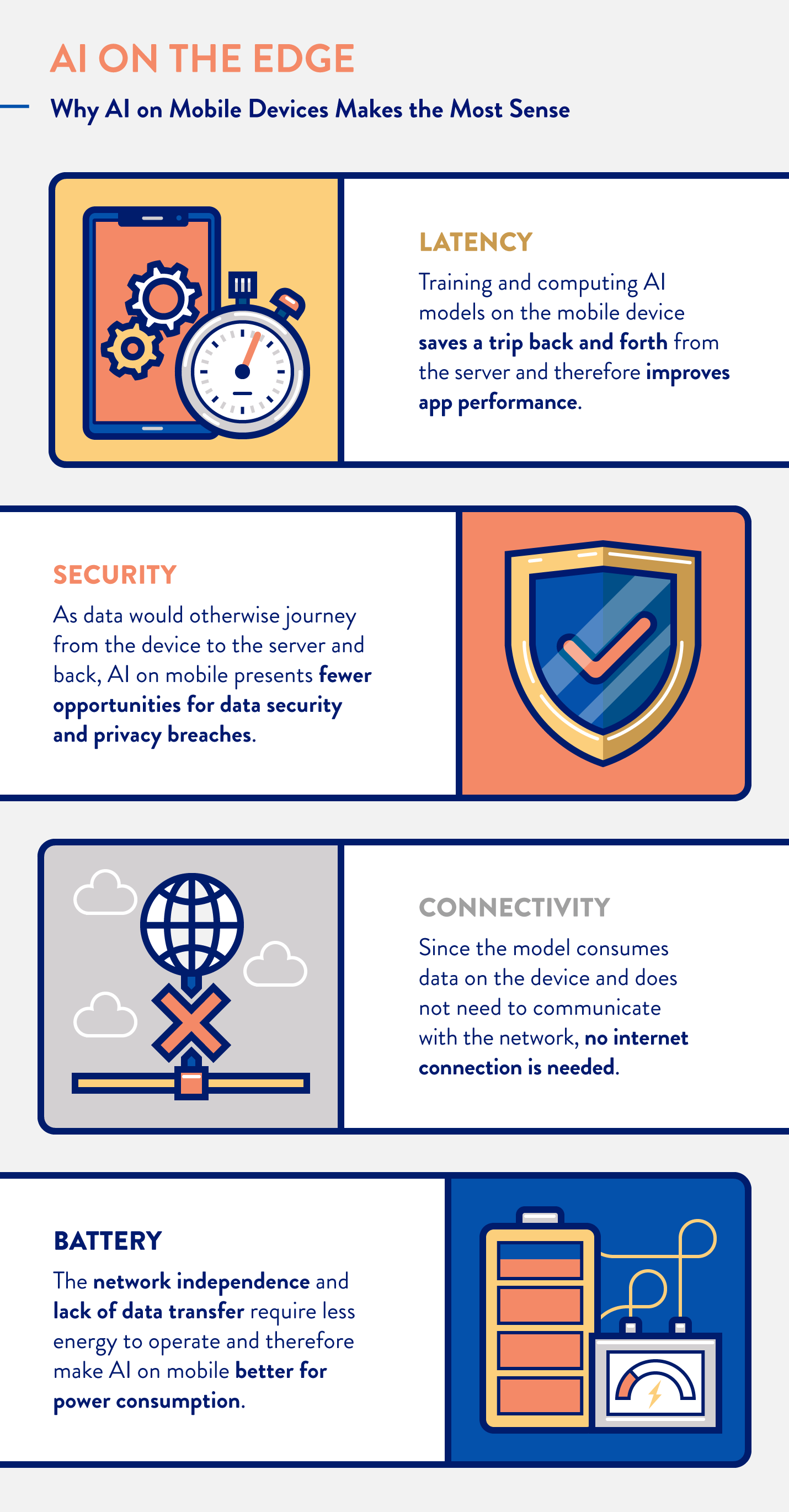

Despite the limited processing, storage, memory, and power that mobile devices offer AI applications, running machine learning models on the mobile device itself makes sense for a number of reasons.

When data is collected on the device, but then transferred to the cloud for computation only to be transferred back to the device, a number of obvious inefficiencies are introduced:

The most obvious inefficiency is latency. It takes time to send data to the cloud, process it there, and send the result back to the device. For some applications (think healthcare apps), those milliseconds or seconds could be vital.

Moving data from the device to the cloud and back also creates opportunities for security breaches. Although hackers could still potentially access the device itself, keeping data on the device reduces its exposure in transit and on remote servers.

If the device relies on data storage and processing in another location, then an internet connection is required to reach that data.

This internet connection also contributes to an unintended side effect: battery depletion. Running AI on the device avoids the need for a connection, along with the extra power consumption, data fetching, and performance concerns that come with it.

How Mobile AI is Progressing

Keeping computation on the device, or “on the edge” of the network, is advantageous for many reasons and will likely become the status quo over the coming decade.

Today, CPUs and GPUs are being supplemented with TPUs (Tensor Processing Units) capable of running AI at the edge.3 Google’s TensorFlow Lite platform (open-source software for machine learning) currently runs on more than 2 billion mobile devices globally.

Let’s cover some of the methods from the cutting edge of AI research that are bringing ML and AI to billions of devices today.

Federated Learning

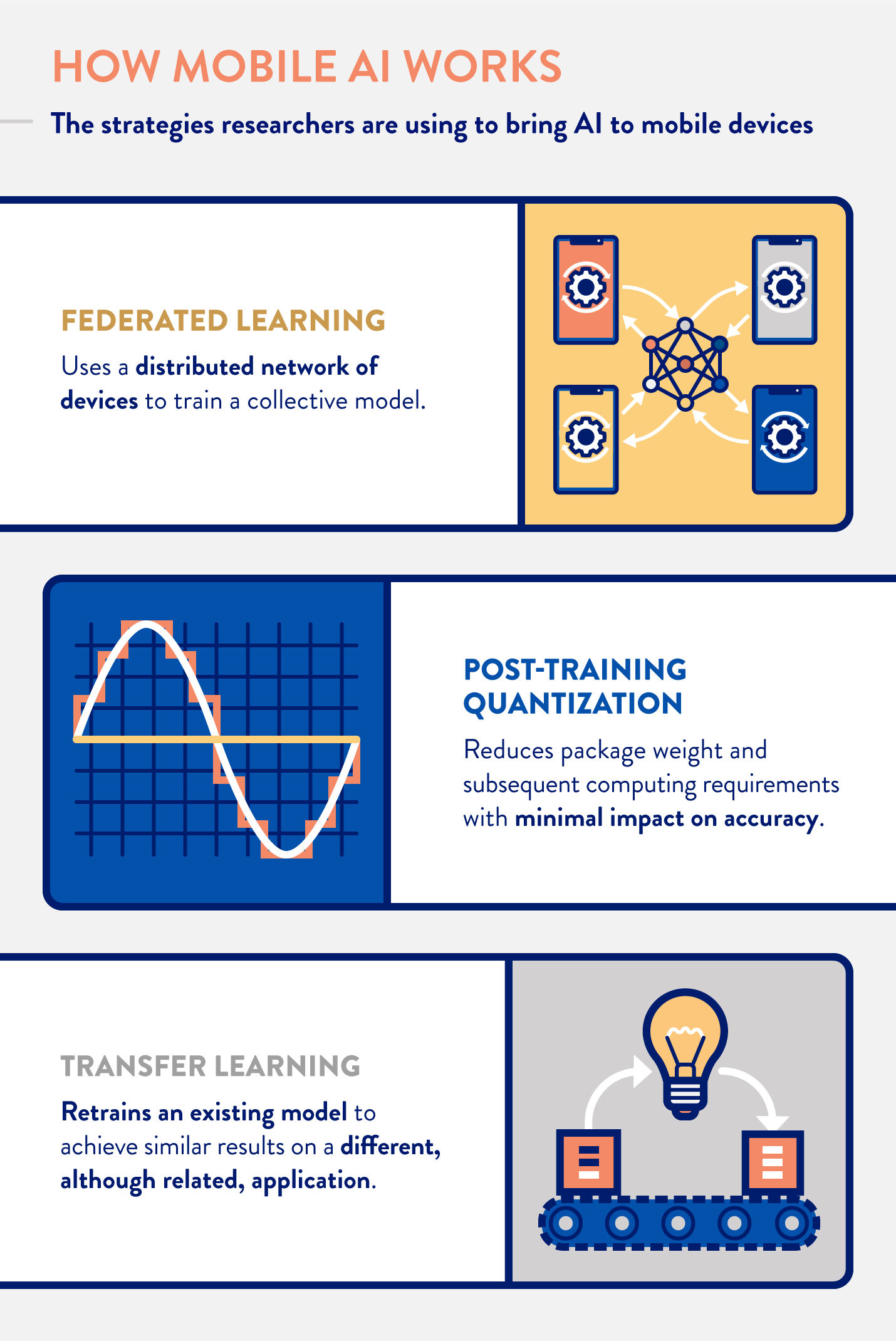

In 2015, research was published advocating for machine learning model training to take place on a distributed network of mobile devices instead of one model being trained on the cloud.4 This was the birth of federated learning, or the decentralized training of a model by multiple client applications in collaboration.

Federated learning works as follows: a pre-trained model is downloaded from the cloud onto the device, where it continues to be trained on actual user data. Once the model has been retrained on the device, the updated model is sent back to the cloud, while the user data itself stays on the device. The models trained by each device are then averaged to produce a single, truly federated model.
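The download, train, and average cycle can be sketched in a few lines of Python. This is a minimal illustration under simplifying assumptions, not how any production system is built: the model is a one-feature linear model, and the names `local_update`, `federated_average`, and `federated_round`, along with the sample data, are hypothetical.

```python
from typing import List, Tuple

Sample = Tuple[float, float]  # (x, y) pair held privately on one device


def local_update(weights: List[float], data: List[Sample],
                 lr: float = 0.1, epochs: int = 5) -> List[float]:
    """Continue training y = w*x + b on one device's own data."""
    w, b = weights
    for _ in range(epochs):
        for x, y in data:
            error = (w * x + b) - y
            w -= lr * error * x
            b -= lr * error
    return [w, b]


def federated_average(client_weights: List[List[float]],
                      client_sizes: List[int]) -> List[float]:
    """Average the clients' weights, weighted by how much data each one saw."""
    total = sum(client_sizes)
    return [
        sum(w[i] * n for w, n in zip(client_weights, client_sizes)) / total
        for i in range(len(client_weights[0]))
    ]


def federated_round(global_weights: List[float],
                    clients: List[List[Sample]]) -> List[float]:
    """One round: send the model out, train locally, average the results."""
    updates = [local_update(list(global_weights), data) for data in clients]
    return federated_average(updates, [len(data) for data in clients])


# Hypothetical data: two devices whose samples all follow y = 2x + 1.
clients = [
    [(0.0, 1.0), (1.0, 3.0)],
    [(2.0, 5.0), (0.5, 2.0), (1.5, 4.0)],
]
weights = [0.0, 0.0]
for _ in range(100):
    weights = federated_round(weights, clients)
```

Only the weight lists cross the device boundary in this sketch; each device's samples are read solely inside `local_update`. After enough rounds, `weights` should sit close to the slope and intercept that generated the data.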

Post-Training Quantization

Reducing the energy and computation that machine learning models require is a major focus for AI researchers, especially for mobile applications. If we plan to operate AI on the edge, we must lighten the load placed on the hardware.

Post-training quantization rounds a trained model’s high-precision floating-point values to lower-precision ones (for example, 32-bit floats to 8-bit integers) to reduce the package “weight.” Despite some sacrifice in accuracy, the ability to execute more calculations in the same amount of time can offset the loss of precision. This method of minification helps the model fit on the target device.5
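To make the rounding concrete, here is a simplified sketch of one common scheme, min-max quantization to 8-bit codes. It is an illustration only; real toolchains use more refined variants. The function names and the sample weights are hypothetical.

```python
from typing import List, Tuple


def quantize(weights: List[float]) -> Tuple[List[int], float, float]:
    """Map float weights onto 256 evenly spaced levels (one byte each)."""
    lo, hi = min(weights), max(weights)
    scale = (hi - lo) / 255 or 1.0  # fall back to 1.0 if all weights are equal
    codes = [min(255, round((w - lo) / scale)) for w in weights]
    return codes, scale, lo


def dequantize(codes: List[int], scale: float, lo: float) -> List[float]:
    """Recover approximate float weights from the 8-bit codes."""
    return [lo + c * scale for c in codes]


# Hypothetical weights, for illustration only.
weights = [-1.0, -0.5, 0.0, 0.25, 1.0]
codes, scale, lo = quantize(weights)
restored = dequantize(codes, scale, lo)
max_error = max(abs(a - b) for a, b in zip(weights, restored))
```

Each weight now needs one byte instead of the four bytes of a 32-bit float, and the rounding error per weight is bounded by about half of `scale`. That bound is the accuracy trade-off described above.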

Transfer Learning

Transfer learning is the process of taking the solution to one problem and applying it to a similar problem. The model must be adapted, however, and will need to be retrained to solve the new problem.

Using pre-trained models, transfer learning allows researchers and developers to build on top of existing AI. A model trained for a similar objective is retrained to achieve the new goal.

A human gesture recognition model, for example, could be retrained to analyze the posturing and body language of animals. The model would be trained on labeled photos and videos of animals provided by developers until it has been sufficiently trained to predict results autonomously.
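One common form of transfer learning keeps the pre-trained layers frozen and retrains only a small new output layer on the new labels. The sketch below illustrates that idea under heavy simplification: `pretrained_features` is a hypothetical stand-in for a real pre-trained network, and the samples and labels are made up.

```python
import math
from typing import List, Sequence, Tuple


def pretrained_features(x: Sequence[float]) -> List[float]:
    """Stand-in for a frozen, pre-trained feature extractor (hypothetical)."""
    return [x[0] + x[1], x[0] - x[1]]


def train_head(samples: List[Sequence[float]], labels: List[int],
               lr: float = 0.1, epochs: int = 200) -> Tuple[List[float], float]:
    """Train only a new logistic-regression head on top of the frozen features."""
    feats = [pretrained_features(x) for x in samples]
    w = [0.0] * len(feats[0])
    b = 0.0
    for _ in range(epochs):
        for f, y in zip(feats, labels):
            z = sum(wi * fi for wi, fi in zip(w, f)) + b
            p = 1.0 / (1.0 + math.exp(-z))  # predicted probability of class 1
            grad = p - y                    # gradient of the log loss w.r.t. z
            w = [wi - lr * grad * fi for wi, fi in zip(w, f)]
            b -= lr * grad
    return w, b


def predict(x: Sequence[float], w: List[float], b: float) -> int:
    """Classify a new input using the frozen features and the retrained head."""
    f = pretrained_features(x)
    return 1 if sum(wi * fi for wi, fi in zip(w, f)) + b > 0 else 0


# Hypothetical labeled examples for the new task.
samples = [(1.0, 0.0), (2.0, 1.0), (0.0, 1.0), (1.0, 3.0), (3.0, 0.5), (0.2, 0.9)]
labels = [1, 1, 0, 0, 1, 0]
w, b = train_head(samples, labels)
```

Because only `w` and `b` are updated, the new task needs far fewer labeled examples and far less computation than training a whole model from scratch.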

Machine Learning Models on Mobile

If you have Google Photos, Gmail, Uber, Airbnb, and other cutting-edge apps installed on your phone, you have experienced the benefits of mobile AI.

If, while typing an email, you were presented with an auto-complete suggestion drawn from your usual lingo, you have likely experienced (and contributed to) federated learning.

The most common applications of machine learning take advantage of data that is widely available on the internet, such as photos, text, and audio. ImageNet, for example, is a project that aims to provide ML developers with labeled images for model training. Currently, it has over 14 million photos.6

Object Detection

Computer vision has been a top priority for many AI researchers. The human ability to see objects accurately evolved over hundreds of thousands of years. When a computer “sees” an image, however, it is simply an array of data that tells the computer how to render the image pixel by pixel.

In order for computers to infer objects within images and videos, they must first be given large amounts of accurately labeled data. For example, for a computer to recognize a cat within a photo, it must first be trained on a very large set of labeled cat photos.

Once well-fed with data, the machine learning model can compare what it has learned to the image being analyzed. Using Convolutional Neural Networks (CNNs), the computer analyzes small patches of pixels at a time, building context within the bigger picture. With each pass, the computer calculates a probability for what the image shows, based on its training.
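The core operation of a CNN, sliding a small filter over patches of pixels, can be sketched without any libraries. The example below is a minimal illustration with a hand-picked filter. In a real network the filter values are learned during training, and the `conv2d` helper, image, and kernel here are hypothetical.

```python
from typing import List

Matrix = List[List[float]]


def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide the kernel over every patch of the image (no padding, stride 1)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(image[i + di][j + dj] * kernel[di][dj]
                for di in range(kh) for dj in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# A tiny grayscale "image": dark on the left, bright on the right.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# A hand-picked filter that responds where brightness increases left to right.
vertical_edge = [
    [-1, 1],
    [-1, 1],
]
feature_map = conv2d(image, vertical_edge)
```

The resulting `feature_map` is nonzero only in the column where the dark and bright regions meet, so the filter has turned raw pixel values into a simple "edge here" signal. Stacking many such learned filters is how a CNN builds context from small patches.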

If you have recently taken a picture of someone’s face, you have likely seen object detection in action. The camera’s computer vision detects faces and highlights the people in focus.7

Speech Recognition

Natural language processing (NLP) is another field of study within AI; it attempts to understand human language, including speech. Similar to image classification and object detection, speech models must be trained to parse each spoken word and also to comprehend the full sentence in context.

While CNNs are used to analyze static images and detect their subject matter, audio and video are often handled with Recurrent Neural Networks (RNNs) because of their time-related nature. To understand language, the computer must not only parse each individual word but also understand each word’s context among the surrounding words.
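What makes a network "recurrent" is that each step combines the current input with a hidden state carried over from the previous steps. The sketch below shows that idea with a single scalar hidden state. The `rnn_forward` helper and the weights `w_x`, `w_h`, and `b` are hypothetical values chosen for illustration, not trained parameters.

```python
import math
from typing import List


def rnn_forward(inputs: List[float], w_x: float, w_h: float, b: float) -> List[float]:
    """Run a one-unit recurrent cell over a sequence and return every hidden state."""
    h = 0.0  # hidden state: the cell's running summary of what it has seen so far
    states = []
    for x in inputs:
        # The new state depends on the current input and on the previous state.
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


# The same two inputs in a different order lead to different final states.
forward = rnn_forward([1.0, 0.0], w_x=1.0, w_h=0.5, b=0.0)
reverse = rnn_forward([0.0, 1.0], w_x=1.0, w_h=0.5, b=0.0)
```

Because the state is fed back in at every step, the final values of `forward` and `reverse` differ even though the inputs are the same. That sensitivity to order is what lets a recurrent model account for a word’s position among its neighbors.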

Mobile technologies like Siri, Alexa, and Google Assistant are using natural language processing to recognize human speech as input to perform commands successfully. Mobile marketers can build on top of these platforms and technologies to let users interact with apps using voice commands.

Examples of AI Mobile Apps

1. Education: Teaching Students to Read Using NLP at Bolo

Google’s Bolo app is leading the way to boost literacy rates around the world.

Bolo uses speech recognition to provide tutoring to readers of all levels. Early studies revealed that 64% of readers who used the Bolo app showed improvement in reading proficiency in only 3 months.8

Bolo also employs gamification techniques to help students earn badges and stay engaged throughout the learning process.

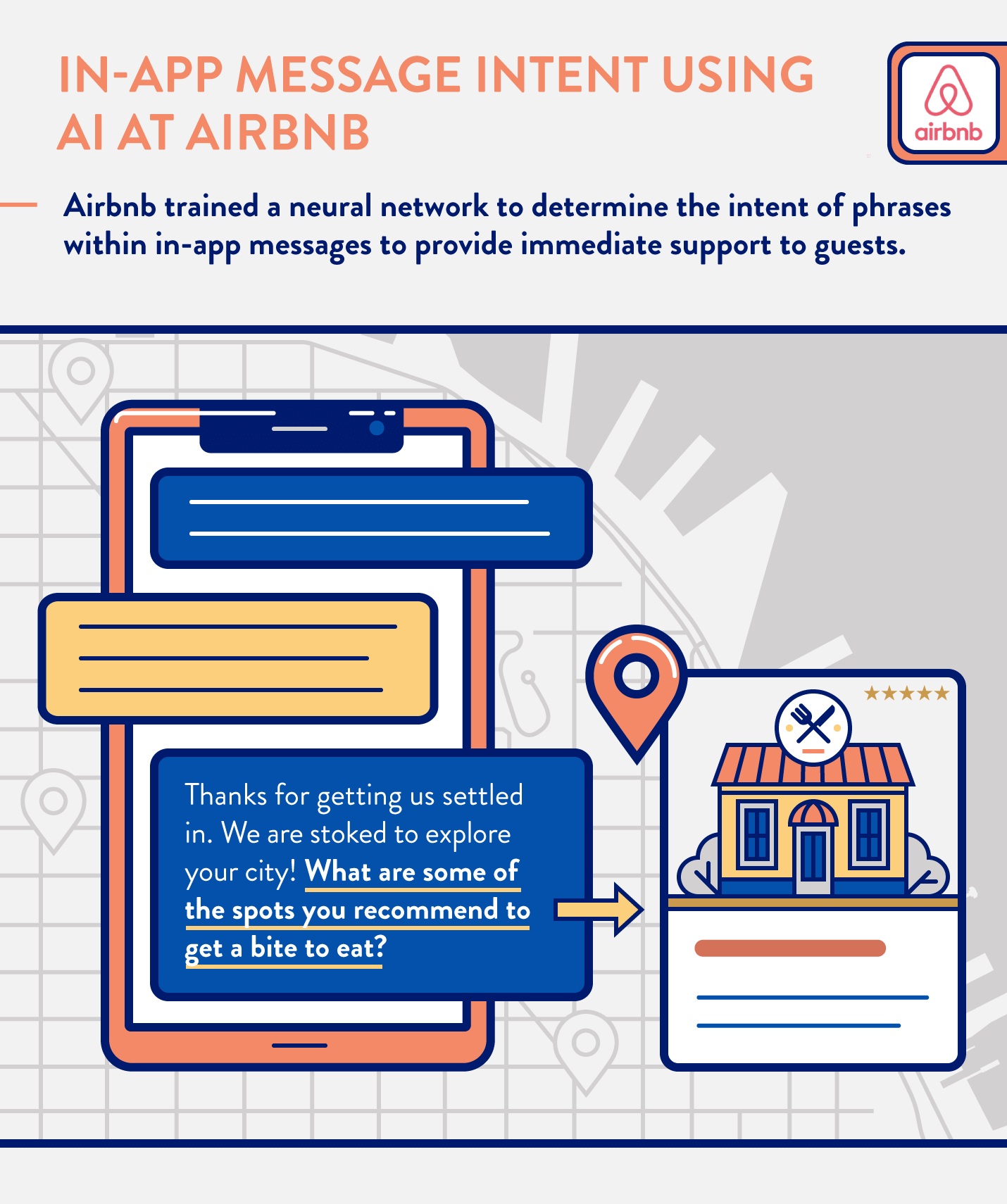

2. Travel: In-App Message Intent at Airbnb

Airbnb understands the benefits ML and AI can bring to the user experience within their app, which is why they trained a text classification model to better understand and solve for the intent of in-app messages between hosts and guests.

They started with unsupervised training, which looks at the entire message database for themes and topics regarding intent.

Then, researchers labeled data (a supervised approach) and discovered that some messages carry multiple intents, meaning more than one question can be asked in a single message.

Ultimately, AI researchers at Airbnb discovered that understanding the intent of key phrases was possible using CNNs. This allowed the model to find phrases even within the most confusing messages.9

3. Accessibility: Using Object Detection AI at Google

Given the immense amount of data required to train models to perform object detection in a general-purpose app, Google is at the top of a very short list of companies who could possibly build an app for visually impaired users to decipher their surroundings.

Lookout from Google allows users to receive audio feedback based on what objects the camera and other sensors detect.10

Leveraging Mobile AI in Your App

As ML and AI continue to use data to make predictions, they will likely become as commonplace in mobile development as the database itself. If you are interested in using ML and AI in your app, there are tools available from Google and Apple to start integrating today.11,12

If you want to harness the user data your app collects, consider integrating our intelligent mobile marketing platform. Whether you are looking for ways to segment your users, optimize your messaging, or gain a deeper understanding of your analytics, CleverTap’s sophisticated tools are built for managing billions of events at scale.


Subharun Mukherjee

Heads Cross-Functional Marketing. Expert in SaaS Product Marketing, CX & GTM strategies.
