For any platform, product, or technology to achieve widespread adoption and truly become mainstream, trust is the foundation. And for AI-powered cognitive systems, which are designed to mirror human-like intelligence, earning that trust is a critical imperative.

However, trust in AI is fragile. Customers today are highly aware of how their data is collected, processed, and used. They expect brands to deliver personalized experiences, but not at the cost of privacy, fairness, or security.

McKinsey & Company’s AI-powered tool, Lilli, for example, helps save 20% of the time spent preparing for client meetings. At the other end of the spectrum, however, we’ve seen AI-powered chatbots swear at customers, and an AI assistant delete a company’s database and even fabricate user profiles.

For brands, product leaders, and decision-makers, this creates a vital mandate:

AI systems must be designed not just to be powerful, but to be responsible, transparent, and secure by default.

Building this kind of trust requires more than just technical excellence; it demands cross-functional alignment across data science, engineering, product, marketing, and compliance teams. From security frameworks and ethical governance to explainability and regulatory adherence, the onus lies on brands to prove that their AI systems are designed with the right intent, integrity, and customer trust at the core.

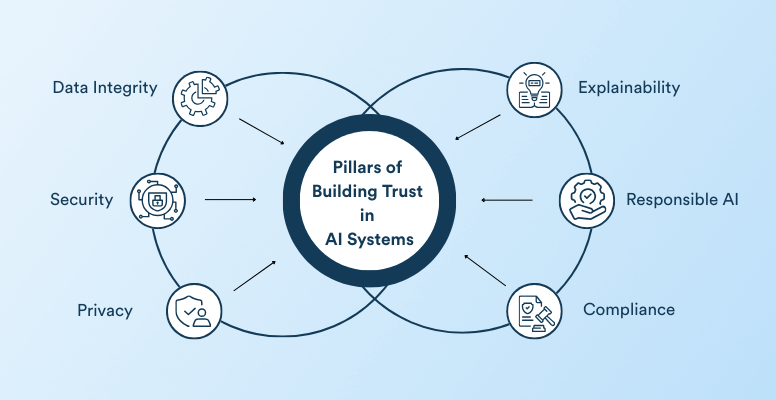

This is where the six foundational pillars come into play, forming a comprehensive framework for the adoption of trustworthy AI at scale.

Six Pillars of Trust in AI

AI undeniably enhances accuracy, creativity, and efficiency. But a single breach of trust, whether it is a data leak, a biased recommendation, or an opaque decision, can cause irreversible damage to both customer relationships and brand equity.

To earn and sustain customer trust, brands must embed security, transparency, and responsibility at every layer of the AI lifecycle. At CleverTap, we believe these six foundational pillars form the bedrock of trustworthy AI adoption.

1. Data Integrity: Building Trust Through Quality and Reliability

AI systems are only as reliable and trustworthy as the data they learn from. The accuracy, completeness, and consistency of training and inference data directly influence model performance and downstream decisions. Poor data quality or a lack of governance can lead to hallucinations, biased recommendations, and unpredictable behaviors, undermining user trust and business credibility.

Ensuring data integrity is fundamental to building AI systems that make responsible, reliable, and explainable decisions.

Key Measures

- High-Quality, Accurate, and Complete Data

For the AI system to deliver results as intended, it is critical to ensure training and inference datasets are accurate, deduplicated, and representative of real-world scenarios. Implement data quality testing and validation processes at every stage of the data lifecycle to avoid skewed outputs and incomplete recommendations. Use automated data validation pipelines to flag anomalies, missing values, and outliers.
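To make "automated data validation pipelines" more concrete, here is a minimal sketch of one validation pass. It assumes records arrive as Python dicts, and it uses three deliberately simple rules: required fields must be present and non-empty, exact duplicates on those fields are flagged, and outliers in one numeric column are detected with Tukey's 1.5 x IQR fences. The function name, field names, and sample data are hypothetical. A production pipeline would typically use a dedicated data-quality tool and per-column rules.

```python
from statistics import quantiles

def validate_records(records, required_fields, numeric_field):
    """Return (row_index, issue) pairs for missing values, duplicates, and IQR outliers."""
    issues = []
    seen = set()
    for i, rec in enumerate(records):
        # Flag required fields that are absent, None, or empty strings
        for name in required_fields:
            if rec.get(name) in (None, ""):
                issues.append((i, f"missing:{name}"))
        # Flag exact duplicates on the required fields
        key = tuple(rec.get(name) for name in required_fields)
        if key in seen:
            issues.append((i, "duplicate"))
        seen.add(key)

    # Flag outliers with Tukey's fences (1.5 x IQR) on one numeric column
    numeric = [(i, rec[numeric_field]) for i, rec in enumerate(records)
               if isinstance(rec.get(numeric_field), (int, float))]
    if len(numeric) >= 4:
        q1, _, q3 = quantiles([v for _, v in numeric], n=4, method="inclusive")
        iqr = q3 - q1
        low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
        issues.extend((i, f"outlier:{numeric_field}") for i, v in numeric
                      if v < low or v > high)
    return issues

# Hypothetical sample: one duplicate, one missing value, one extreme order value
sample = [
    {"user_id": "u1", "order_value": 10},
    {"user_id": "u2", "order_value": 12},
    {"user_id": "u2", "order_value": 12},
    {"user_id": "u3", "order_value": None},
    {"user_id": "u4", "order_value": 11},
    {"user_id": "u5", "order_value": 13},
    {"user_id": "u6", "order_value": 500},
]
for row, issue in validate_records(sample, ["user_id", "order_value"], "order_value"):
    print(row, issue)
```

Flagged rows can then be quarantined or routed for review before they reach training or inference.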

- Data Provenance and Lineage Tracking

Maintain detailed metadata records documenting the origin, transformation, and usage of all datasets. Track data lineage across the lifecycle, from collection and preprocessing to deployment and inference, to ensure traceability and accountability.
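One lightweight way to capture lineage is to record a content hash of a dataset before and after each transformation, together with the step name, the upstream source, and a timestamp. The sketch below assumes datasets are lists of JSON-serializable rows. `LineageLog`, `fingerprint`, and the sample steps are hypothetical names used for illustration only; dedicated lineage tools and metadata catalogs do this at scale.

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, List, Optional

def fingerprint(rows):
    """Stable SHA-256 content hash of a list of JSON-serializable rows."""
    return hashlib.sha256(json.dumps(rows, sort_keys=True).encode("utf-8")).hexdigest()

@dataclass
class LineageStep:
    name: str               # transformation applied, e.g. "dedupe"
    source: Optional[str]   # upstream system, recorded where known
    input_hash: str
    output_hash: str
    timestamp: str

@dataclass
class LineageLog:
    dataset: str
    steps: List[LineageStep] = field(default_factory=list)

    def run(self, name: str, transform: Callable, rows, source: Optional[str] = None):
        """Apply a transformation and record what went in and what came out."""
        output = transform(rows)
        self.steps.append(LineageStep(name, source, fingerprint(rows), fingerprint(output),
                                      datetime.now(timezone.utc).isoformat()))
        return output

    def is_continuous(self) -> bool:
        """True if every step consumed exactly what the previous step produced."""
        return all(prev.output_hash == cur.input_hash
                   for prev, cur in zip(self.steps, self.steps[1:]))

def dedupe(rows):
    seen, out = set(), []
    for row in rows:
        key = json.dumps(row, sort_keys=True)
        if key not in seen:
            seen.add(key)
            out.append(row)
    return out

raw = [{"id": 1, "v": 5}, {"id": 1, "v": 5}, {"id": 2, "v": None}]
lineage = LineageLog("purchase_events")
deduped = lineage.run("dedupe", dedupe, raw, source="crm_export")
cleaned = lineage.run("drop_nulls", lambda rs: [r for r in rs if r["v"] is not None], deduped)
print(lineage.is_continuous(), len(lineage.steps))
```

Because the hashes chain input to output, an auditor can later confirm that a model was trained on exactly the dataset version the log describes.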

- Minimizing Hallucinations and Misinformation

Hallucinations are instances where AI generates incorrect, misleading, or fabricated content. Mitigating them depends largely on the quality, consistency, and relevance of the source data, as well as on the contextual clarity that end users provide through prompts and instructions. Measures include:

- Deploying model behavior monitoring frameworks to detect and minimize hallucinations, bias, and malicious content.

- Ensuring models reference validated data sources wherever possible.

- Regularly benchmarking outputs against trusted knowledge bases to maintain factual consistency.

- Bias Identification and Data Diversity

Use diverse, representative datasets that reflect real-world demographics, contexts, and scenarios to avoid skewed recommendations. Before model training begins, establish fairness thresholds and audit the data to uncover sampling imbalances or systemic gaps that could introduce bias into AI outputs.
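A pre-training representation audit can be as simple as comparing each group's share of the training data with a reference share and flagging groups that fall below a chosen fraction of it. In the sketch below, the reference mix, the `min_ratio=0.5` threshold, and the `region` attribute are all hypothetical. Real audits would use the brand's own customer base or census data as the reference and would check several attributes, including their intersections.

```python
from collections import Counter

def audit_representation(records, attribute, reference_shares, min_ratio=0.5):
    """Compare each group's share of the dataset with a reference share.
    A group is flagged when observed < min_ratio * expected."""
    counts = Counter(r.get(attribute) for r in records)
    total = sum(counts.values())
    report = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total if total else 0.0
        report[group] = {
            "observed": observed,
            "expected": expected,
            "underrepresented": observed < min_ratio * expected,
        }
    return report

training_rows = ([{"region": "north"}] * 70 + [{"region": "south"}] * 25
                 + [{"region": "east"}] * 5)
reference = {"north": 0.40, "south": 0.35, "east": 0.25}  # hypothetical customer-base mix
for group, stats in audit_representation(training_rows, "region", reference).items():
    if stats["underrepresented"]:
        print(f"{group}: {stats['observed']:.0%} of data vs {stats['expected']:.0%} expected")
```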

- Continuous Data Governance

Adopt data governance frameworks, such as the Data Management Body of Knowledge (DAMA-DMBOK) or the Data Governance Institute (DGI) Framework, that define ownership, quality standards, retention policies, and access controls. Perform data freshness checks to ensure models are trained on up-to-date information, and establish clear escalation protocols for when data anomalies or inconsistencies are detected.
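A data freshness check with an escalation hook might look like the sketch below. It assumes a catalog that maps each dataset name to its last successful refresh time. The catalog contents, the 7-day threshold, and the print-based "escalation" are placeholders for whatever alerting or incident tooling a team already uses.

```python
from datetime import datetime, timedelta, timezone

def stale_datasets(last_updated, max_age, now=None):
    """Return the names of datasets not refreshed within max_age, sorted for stable output."""
    now = now or datetime.now(timezone.utc)
    return sorted(name for name, updated in last_updated.items() if now - updated > max_age)

now = datetime(2024, 6, 1, tzinfo=timezone.utc)
catalog = {  # hypothetical catalog: dataset name -> last successful refresh
    "app_events": now - timedelta(hours=2),
    "crm_profiles": now - timedelta(days=9),
}
for name in stale_datasets(catalog, max_age=timedelta(days=7), now=now):
    # Placeholder escalation step: route this to your on-call or incident tooling
    print(f"ESCALATE: {name} has not been refreshed in over 7 days")
```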

For Brands Purchasing AI-Enabled Solutions

Trustworthy AI starts with trustworthy data. Before adopting third-party AI tools, brands should:

- Confirm the vendor follows strict data integrity frameworks and performs dataset quality checks regularly.

- Request documentation on data sources, preprocessing methods, and lineage to ensure reliability.

- Validate that models are trained on representative, bias-tested datasets.

- Check if vendors have hallucination detection and mitigation frameworks in place.

- Ensure they provide audit trails showing how training and inference data are collected, validated, and refreshed.

2. Security First: Building a Safe AI Ecosystem

AI systems process massive volumes of sensitive data and generate business-critical insights. Any compromise, such as a model breach, data exposure, or supply chain vulnerability, can have far-reaching reputational, regulatory, and financial consequences. Security must therefore be designed in from the start, not bolted on later.

Key Measures

- Secure AI Frameworks

Leverage industry-recognized frameworks to establish proactive defenses across the AI lifecycle. These include the NIST AI Risk Management Framework (AI RMF), Google’s Secure AI Framework (SAIF), and the Cloud Security Alliance (CSA) AI Model Risk Management Framework, among others.

- Defending Against LLM-Specific Threats

Large Language Models (LLMs) introduce unique vulnerabilities. Adopt a layered strategy to identify and defend against LLM security risks using:

- OWASP Top 10 for Large Language Model Applications to mitigate prompt injection and jailbreaking attacks, data poisoning, supply chain vulnerabilities, and more.

- Threat Modeling (STRIDE and DREAD) to proactively identify threats and assess risks.

- Red Teaming and Sandboxing to simulate adversarial scenarios and isolate untrusted model outputs.

- Regular security audits to assess model security across training, deployment, and inference stages.

- Zero-Trust Architectures

A zero-trust architecture (ZTA) assumes no implicit trust anywhere in the ecosystem. Instead, it operates on the principle of “never trust, always verify,” meaning every request, model call, and API interaction must be explicitly authenticated, authorized, and continuously validated before granting access.

Implementing ZTA throughout the AI development and deployment lifecycle, including data and model security, application and API security, and automation and orchestration, secures the system at its most vulnerable points.
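The "never trust, always verify" principle means each request carries proof of identity and permissions that are checked on every call, with nothing granted because of network location. The sketch below illustrates this with a short-lived, HMAC-signed token carrying scopes. The token format, the `SECRET` value, and the scope names are hypothetical, and this is not JWT. Production systems would normally use standard tokens issued by an identity provider, validated with a vetted library, often alongside mutual TLS.

```python
import base64
import hashlib
import hmac
import json
import time

# Hypothetical shared secret for this sketch; load it from a secrets manager in practice.
SECRET = b"replace-with-a-managed-secret"

def _sign(body: str) -> str:
    return hmac.new(SECRET, body.encode("utf-8"), hashlib.sha256).hexdigest()

def issue_token(subject, scopes, ttl_seconds=300, now=None):
    """Issue a short-lived token naming the caller and the actions it may perform."""
    now = int(time.time() if now is None else now)
    claims = {"sub": subject, "scopes": sorted(scopes), "exp": now + ttl_seconds}
    body = base64.urlsafe_b64encode(json.dumps(claims, sort_keys=True).encode("utf-8")).decode("ascii")
    return f"{body}.{_sign(body)}"

def verify_request(token, required_scope, now=None):
    """Authenticate and authorize one request; no caller is trusted by default."""
    now = int(time.time() if now is None else now)
    body, sep, sig = token.rpartition(".")
    if not sep or not hmac.compare_digest(sig.encode("utf-8"), _sign(body).encode("utf-8")):
        return False  # malformed, forged, or tampered token
    claims = json.loads(base64.urlsafe_b64decode(body))
    if claims["exp"] <= now:
        return False  # expired: callers must re-authenticate regularly
    return required_scope in claims["scopes"]  # least privilege: the scope must match

token = issue_token("recommender-svc", ["model:infer"], ttl_seconds=60)
print(verify_request(token, "model:infer"), verify_request(token, "model:train"))
```

Every model call repeats the full check, so a stolen token is useful only for its narrow scope and short lifetime.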

- Robust Data Sharing and Governance

Establish strict data-sharing and governance policies, including with external vendors, partners, and APIs:

- Classify data by sensitivity, define clear ownership, and establish access, retention, and deletion policies for all datasets.

- Mandate data encryption, masking, and logging for all data, including in external integrations.

- Require contractual guarantees from vendors on data handling, storage, retention, and regulatory adherence, especially when using third-party APIs or embeddings.

- Securing the Supply Chain

AI rarely operates in isolation. It interacts with databases, APIs, and internal and external applications, and each integration introduces potential vulnerabilities. Protocols like the Model Context Protocol (MCP), Agent-to-Agent (A2A), and Agent Communication Protocol (ACP) enable interoperability, but they must be secured through encryption, token-based authentication, and continuous monitoring.

The AI supply chain, including third-party APIs, plugins, and embeddings, is only as strong as its weakest link. A compromised integration can expose the entire system. Rigorous vendor vetting, code validation, and contractual safeguards are essential.

For Brands Purchasing AI-Enabled Solutions

For brands procuring AI-powered platforms or SaaS solutions, security must be a primary selection criterion, not an afterthought. Before onboarding a third-party AI-enabled tool, ensure the vendor:

- Adheres to recognized security frameworks, such as SAIF, NIST AI RMF, ISO/IEC 27001, and others.

- Provides clear disclosures on data usage, storage, and sharing practices.

- Maintains an audit trail for model updates, training data lineage, and inference logs.

- Has robust data governance policies in place, including encryption, anonymization, and access controls.

Furthermore, conduct a comprehensive vendor risk assessment, covering model provenance, compliance alignment, and incident response readiness. This approach ensures security-first procurement and minimizes hidden risks when adopting external AI capabilities.

3. Privacy by Design: Protecting Customer Trust at Its Core

Customers today are increasingly privacy-aware and are willing to share information only if they believe their data is secure, anonymized, and ethically used. For AI-enabled systems, adopting Privacy by Design (PbD) principles ensures compliance, customer confidence, and sustainable AI adoption.

Key Measures

- Data Governance

Collect only necessary data, classify it by sensitivity, and enforce access and retention policies. Use pseudonymization and anonymization techniques to replace or remove personally identifiable information (PII) while maintaining analytical value.
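As an illustration of data minimization combined with pseudonymization, the sketch below keeps only an allow-listed set of fields. It replaces direct identifiers with keyed HMAC-SHA256 pseudonyms, which stay consistent across records so joins and counts still work. The key, field names, and 16-character truncation are hypothetical choices. The key must be stored separately from the data. Also note that under GDPR, pseudonymized data is still personal data, so this reduces exposure but does not amount to anonymization.

```python
import hashlib
import hmac

# Hypothetical key; keep it in a secrets manager, separate from the pseudonymized data.
PSEUDONYM_KEY = b"rotate-me-regularly"

def pseudonymize(value: str) -> str:
    """Deterministic keyed pseudonym: the same input and key always give the same token."""
    return hmac.new(PSEUDONYM_KEY, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]

def minimize_and_pseudonymize(record, keep_fields, pii_fields):
    """Keep only needed fields and replace direct identifiers with keyed pseudonyms."""
    out = {}
    for key in keep_fields:
        if key not in record:
            continue
        out[key] = pseudonymize(str(record[key])) if key in pii_fields else record[key]
    return out

customer = {"email": "a@example.com", "phone": "555-0100", "city": "Pune", "purchases": 3}
print(minimize_and_pseudonymize(customer, ["email", "city", "purchases"], {"email"}))
```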

- Privacy-Preserving Machine Learning

Use techniques like federated learning and differential privacy to protect sensitive data during training and inference:

- Federated Learning: Train models locally on user devices and share only model updates, not raw data, with a central server for aggregation.

- Differential Privacy: Add calibrated statistical “noise” to datasets, query results, or model training to protect individual identities while preserving aggregate patterns.
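To show what "calibrated noise" means in practice, here is a minimal sketch of the Laplace mechanism for a counting query. Adding or removing one person changes a count by at most 1, so Laplace noise with scale 1/epsilon yields epsilon-differential privacy for that single query. Smaller epsilon means more noise and stronger privacy. The data and epsilon value are hypothetical. Naive floating-point noise sampling like this has known weaknesses, so production systems should rely on a vetted differential-privacy library and track the cumulative privacy budget across queries.

```python
import random

def laplace_noise(scale, rng):
    """Sample Laplace(0, scale) as the difference of two i.i.d. exponentials with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)

def private_count(values, predicate, epsilon, rng=None):
    """Release a count under epsilon-differential privacy with the Laplace mechanism.
    A count has sensitivity 1, so the noise scale is 1 / epsilon."""
    rng = rng or random.Random()
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)

ages = list(range(18, 80))  # toy data: one customer per age
print(f"noisy count aged 65+: {private_count(ages, lambda a: a >= 65, epsilon=0.5):.1f}")
```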

- Granular Consent Management

Provide users with clear dashboards to manage preferences and honor rights like data deletion and portability.

- Encryption & Access Controls

Protect sensitive data in transit, at rest, and during inference using robust encryption protocols. Enforce role-based permissions and maintain immutable access logs for every data request.
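The role-based permission check and per-request logging described above can be sketched as follows. The key detail is that denied requests are logged as well as granted ones. The roles and permission strings are hypothetical. The in-memory list is append-only only by convention, so a real immutable log needs tamper-evident or write-once storage. Encryption is deliberately left out of this sketch; use a vetted cryptography library or your platform's managed encryption for that.

```python
from datetime import datetime, timezone

# Hypothetical role-to-permission mapping for illustration
ROLE_PERMISSIONS = {
    "analyst": {"read:aggregates"},
    "data_scientist": {"read:aggregates", "read:features"},
    "privacy_officer": {"read:aggregates", "read:features", "read:pii"},
}

# Append-only by convention in this sketch; back it with tamper-evident storage in practice.
access_log = []

def request_access(user, role, permission):
    """Check a role-based permission and log the request whether or not it is granted."""
    allowed = permission in ROLE_PERMISSIONS.get(role, set())
    access_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "permission": permission,
        "allowed": allowed,
    })
    return allowed

request_access("asha", "analyst", "read:pii")              # denied, but still logged
request_access("ravi", "data_scientist", "read:features")  # granted
print(len(access_log), [entry["allowed"] for entry in access_log])
```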

For Brands Purchasing AI-Enabled Solutions

Privacy-first principles must extend to every vendor, platform, and AI-enabled solution you integrate. Before adopting third-party AI tools, brands should:

- Confirm the vendor follows Privacy by Design principles and complies with relevant privacy regulations (GDPR, DPDP, CCPA, LGPD).

- Request data handling disclosures covering collection practices, retention policies, anonymization techniques, and storage locations.

- Ensure federated learning, encryption, or differential privacy techniques are used where appropriate.

- Verify that vendors provide consent orchestration capabilities and honor user rights like data portability and deletion requests.

- Conduct privacy impact assessments (PIAs) for high-risk AI applications before integration.

By ensuring vendor-level alignment with privacy-first practices, brands can reduce compliance risks, safeguard customer data, and reinforce trust at scale.

4. Explainable AI: Transparency That Builds Confidence

The age of black-box models is over. Customers, regulators, and stakeholders are increasingly demanding visibility into AI-driven decision-making. Brands can no longer expect trust if they cannot explain why a model made a certain prediction or recommendation.

Explainability enables accountability and fosters confidence by helping stakeholders understand why predictions and recommendations are made.

Key Measures

- Explainable Outputs

Use Explainable AI (XAI) frameworks and toolkits, such as SHapley Additive exPlanations (SHAP), Local Interpretable Model-agnostic Explanations (LIME), IBM’s AI Explainability 360 (AIX360), and others, that make predictions interpretable, human-readable, and defensible.
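SHAP is based on Shapley values, which split a single prediction among its input features. The sketch below computes exact Shapley values by brute force for a tiny model. It treats features "absent" from a coalition as set to a baseline value, which is one common choice among several. This is not the `shap` library's API: that library uses efficient approximations such as KernelSHAP and TreeSHAP, whereas enumeration costs on the order of 2^n model calls and is only practical for a handful of features. The churn model, features, and baseline are hypothetical.

```python
from itertools import combinations
from math import factorial

def shapley_values(model, x, baseline):
    """Exact Shapley attributions for one prediction (features outside a coalition use baseline)."""
    n = len(x)

    def value(coalition):
        return model([x[i] if i in coalition else baseline[i] for i in range(n)])

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))  # marginal contribution of i
    return phi

features = ["days_inactive", "support_tickets", "sessions_per_week"]

def churn_score(z):  # hypothetical scoring model with one interaction term
    return 0.02 * z[0] + 0.3 * z[1] - 0.1 * z[2] + 0.01 * z[0] * z[1]

customer = [30, 2, 1]
baseline = [5, 0, 4]  # e.g. an "average customer" reference point
for name, contribution in zip(features, shapley_values(churn_score, customer, baseline)):
    print(f"{name}: {contribution:+.3f}")
```

The attributions always sum to the difference between the prediction for `customer` and for `baseline`, which is what makes them defensible in a human-readable explanation.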

- Decision Traceability & Provenance

Maintain immutable audit trails of data inputs, model parameters, and inference results. Track model provenance to capture when and how models are trained, deployed, or updated.
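A common way to make an audit trail tamper-evident is a hash chain: each entry's hash covers both its own content and the previous entry's hash, so editing, reordering, or removing any entry other than the last invalidates every later hash. The sketch below assumes events are JSON-serializable dicts; the event fields are hypothetical. A hash chain makes tampering detectable rather than impossible. To also catch truncation, the latest hash should be anchored somewhere the log writer cannot modify, such as write-once storage.

```python
import hashlib
import json

GENESIS = "0" * 64

def _digest(event, prev_hash):
    body = json.dumps({"event": event, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(body.encode("utf-8")).hexdigest()

def append_entry(chain, event):
    """Append an event whose hash covers both its content and the previous entry's hash."""
    event = json.loads(json.dumps(event))  # private deep copy of the JSON-serializable event
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    chain.append({"event": event, "prev": prev_hash, "hash": _digest(event, prev_hash)})

def verify_chain(chain):
    """Recompute every hash; any edit, reorder, or mid-chain deletion breaks verification."""
    prev_hash = GENESIS
    for entry in chain:
        if entry["prev"] != prev_hash or entry["hash"] != _digest(entry["event"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True

audit_log = []
append_entry(audit_log, {"type": "train", "model": "churn-v3", "data_hash": "placeholder-hash"})
append_entry(audit_log, {"type": "deploy", "model": "churn-v3"})
append_entry(audit_log, {"type": "inference", "model": "churn-v3", "segment": "high_value"})
print(verify_chain(audit_log))
```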

- Disclosure Protocols

Clearly disclose when AI is influencing outcomes, such as in personalization, recommendations, or eligibility scoring. Ensure the system offers user-friendly explanations for critical decisions such as customer segmentation, risk scoring, or loan approvals.

- Feedback-Driven Learning Loops

AI gets better with feedback, but only if that feedback is collected and applied securely. By continuously tracking model performance and enabling controlled feedback loops, platforms can reduce bias and hallucinations while preventing malicious data from corrupting learning pipelines.

For Brands Purchasing AI-Enabled Solutions

When procuring third-party AI-powered platforms, brands must ensure that explainability is built into the design. Check whether the vendor:

- Supports explainable outputs via dashboards, APIs, or reports that detail how predictions are made.

- Provides clear model disclosures, including details about training data sources, feature importance, and decision logic.

- Ensures compatibility with XAI frameworks or APIs to integrate explainability into your existing governance workflows.

By enforcing these evaluation criteria, brands ensure that third-party AI solutions remain transparent, interpretable, and compliant, protecting both customer trust and regulatory standing.

5. Responsible AI: Governance, Ethics, and Fairness

AI systems have gone beyond automating routine tasks. Today, they influence decisions that affect customers, markets, and society. But AI is only as good as the data it learns from. Without the right ethical guardrails, governance structures, and bias detection mechanisms, AI models risk perpetuating unfairness, misinformation, exclusion, and discrimination, undermining both customer trust and brand integrity.

Responsible AI is about aligning AI outcomes with human values, regulatory expectations, and societal norms. It requires embedding ethical accountability into every layer of the AI lifecycle.

Key Measures

- AI Governance Board and Accountability Structures

Set up cross-functional AI Governance Boards with representation from engineering, legal, product, security, and compliance. The board should develop comprehensive AI usage policies and guidelines in line with relevant international frameworks, such as the OECD AI Principles, NIST AI RMF, and the EU AI Act. It should also define role-based accountability for each stage of the AI lifecycle, including who is responsible for overseeing compliance, explainability, and risk mitigation.

- Bias and Fairness Controls

Unchecked data or model biases can lead to unintended discrimination and regulatory non-compliance. Use automated bias detection pipelines to continuously test training datasets, feature engineering, and outputs for systemic, latent, and intersectional biases. Introduce human-in-the-loop controls for high-stakes decision-making.
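As one example of an automated output check, the sketch below compares selection rates (for instance, the share of users who receive an offer) across groups. It flags any group whose rate is below 80% of the highest group's rate. This is the "four-fifths rule" heuristic from US employment-selection guidance, used here purely as an illustrative threshold. The group labels and outcomes are hypothetical. A single ratio cannot capture latent or intersectional bias, so it would typically be one of several metrics, and flagged segments would be routed to human review.

```python
from collections import defaultdict

def selection_rates(outcomes):
    """outcomes: iterable of (group, was_selected) pairs. Returns the selection rate per group."""
    totals, selected = defaultdict(int), defaultdict(int)
    for group, was_selected in outcomes:
        totals[group] += 1
        selected[group] += int(was_selected)
    return {g: selected[g] / totals[g] for g in totals}

def disparate_impact_check(outcomes, threshold=0.8):
    """Flag groups whose selection rate is below `threshold` x the highest group's rate."""
    rates = selection_rates(outcomes)
    best = max(rates.values())
    return {
        g: {"rate": r, "ratio": r / best if best else 1.0,
            "flagged": best > 0 and r / best < threshold}
        for g, r in rates.items()
    }

# Hypothetical model decisions: group A selected 50/100 times, group B 30/100 times
decisions = [("A", i < 50) for i in range(100)] + [("B", i < 30) for i in range(100)]
for group, result in disparate_impact_check(decisions).items():
    if result["flagged"]:
        print(f"group {group}: ratio {result['ratio']:.2f}, send for human review")
```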

- Ethical Risk Assessments

Before deploying AI systems, conduct structured AI impact assessments and scenario testing to evaluate social, ethical, and operational risks. Review model interpretability and explainability, and confirm that the system’s intended purpose aligns with brand values and regulatory mandates.

- Transparency & User Disclosures

Trust improves when customers know when and how AI is being used. As a best practice, companies should disclose AI-driven decisions to end-users, especially in personalization, recommendations, and eligibility scoring.

- User-Controlled Autonomy

AI systems can execute tasks independently, but unrestricted autonomy is risky. Give end users the ability to choose the level of autonomy they are comfortable delegating to the AI system, from human-reviewed recommendations to fully automated decision-making.
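One way to model user-selectable autonomy is as ordered levels. Each action then runs at the lower of the user's chosen level and a cap set for that action type, so users can delegate more to the AI while high-impact actions still keep a human in the loop. The three levels, the per-action caps, and the action names below are hypothetical illustrations, not a prescribed design.

```python
from enum import IntEnum

class Autonomy(IntEnum):
    SUGGEST_ONLY = 0     # AI recommends; a human carries out the action
    HUMAN_APPROVAL = 1   # AI acts only after a human approves
    FULLY_AUTOMATED = 2  # AI acts on its own

def decide(user_level, action_cap, approved=False):
    """Resolve what the AI may do for one action.
    The effective level is the lower of the user's choice and the cap for this action type."""
    level = min(user_level, action_cap)
    if level == Autonomy.FULLY_AUTOMATED:
        return "execute"
    if level == Autonomy.HUMAN_APPROVAL:
        return "execute" if approved else "await_approval"
    return "suggest"

# Hypothetical caps: low-risk actions may run unattended; budget changes always need sign-off
ACTION_CAPS = {
    "send_push_notification": Autonomy.FULLY_AUTOMATED,
    "change_campaign_budget": Autonomy.HUMAN_APPROVAL,
}
user_choice = Autonomy.FULLY_AUTOMATED
for action, cap in ACTION_CAPS.items():
    print(action, "->", decide(user_choice, cap))
```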

For Brands Purchasing AI-Enabled Solutions

When adopting third-party AI solutions, brands must ensure vendors uphold the same ethical, governance, and fairness standards they demand internally. Before selecting an AI-enabled platform, brands should:

- Confirm the vendor has a documented Responsible AI framework with ethical usage policies and fairness commitments.

- Request bias and fairness assessment reports to validate model integrity across demographic groups.

- Verify that the vendor provides human oversight options for critical, high-impact AI decisions.

- Ensure the solution includes explainability layers outlining data sources, decision logic, and potential limitations.

- Include ethical usage clauses in contracts to define liability, ownership, and redress mechanisms for harmful AI outcomes.

By holding vendors accountable to responsible AI standards, brands can mitigate risks, avoid reputational damage, and strengthen customer trust.

6. Regulatory Compliance: Staying Ahead of the Curve

As AI adoption accelerates, regulatory frameworks around data privacy, algorithmic transparency, and automated decision-making are evolving at a rapid pace. From GDPR and CCPA to India’s DPDP Act and the EU AI Act, businesses face increasingly complex compliance obligations.

To succeed, organizations must adopt a compliance-by-design mindset, embedding governance throughout the AI lifecycle.

Key Measures

- Alignment with Global Standards: Map your AI systems to applicable regulations like GDPR, DPDP, and the EU AI Act from day one.

- Dynamic Compliance Monitoring: Continuously monitor regulatory updates and adapt policies in real time, especially for high-risk domains.

- Auditability and Governance: Maintain comprehensive audit trails covering training datasets, model parameters, inference decisions, and updates. Establish explainability layers to meet “Right to Explanation” obligations under GDPR and other emerging AI regulations.

- Cross-Border Data Compliance: Ensure lawful data transfers using Standard Contractual Clauses (SCCs), Binding Corporate Rules (BCRs), and jurisdiction-specific safeguards.

For Brands Purchasing AI-Enabled Solutions

When procuring third-party AI platforms, compliance cannot be assumed; it must be verified. Before onboarding AI-enabled solutions, brands should:

- Confirm the vendor complies with all relevant data privacy and AI-specific regulations (GDPR, DPDP, EU AI Act, and others).

- Request conformity assessments or certifications (such as ISO/IEC 27001, SOC 2, or upcoming EU AI Act declarations).

- Ensure the vendor provides audit trails for model updates, data sources, and inference logs to demonstrate compliance when required.

- Validate cross-border data transfer safeguards, particularly if data is processed outside regulated geographies.

- Seek disclosure reports detailing how the vendor ensures fairness, transparency, and risk classification for deployed models.

By demanding compliance-by-design assurances, brands mitigate regulatory exposure while reinforcing customer trust and market credibility.

The Future of AI is Built on Trust

As AI-powered systems increasingly influence customer engagement, personalization, and business decisions, trust has become the defining currency of adoption. For organizations, that means earning trust every single day: securing data, explaining decisions, governing responsibly, and putting customers first.

The companies that get this right will not just build better AI systems; they’ll build stronger, more enduring relationships.

At CleverTap, we’ve designed CleverAI with trust as a first-class principle. By prioritizing transparency, explainability, and security at the core, we empower brands to leverage AI confidently while respecting customer rights.

Sagar Hatekar

Leads product management. Expert in Marketing Analytics & Engagement platforms.
